What is Big Data Toolkit

The Big Data Toolkit (BDT) is an Esri Professional Services solution that allows customers to aggregate, analyze, and enrich big data within their existing big data environment.

BDT is delivered as a term subscription that includes a set of spatial analysis and data interoperability tools that work with an existing big data environment.

BDT can help data scientists and analysts enhance their big data analytics with spatial tools that take advantage of the massive computing capacity they already have.

With Big Data Toolkit, you can:

Geospatially enable your existing big data cluster.

Speed up your spatial analysis on data stored within your existing big data infrastructure.

Configure your workflows with no coding required.

Perform exploratory analysis with the Notebook environment capability.

Share analysis results in ArcGIS to improve decision-making.

Use processors that package geospatial analytics libraries from Esri so they can run on your big data cluster, rather than in an ArcGIS environment.

Read data from the sources, protocols, and formats you already use to store your data.

Write analysis results back to your specific formats and data sinks.

Extend the API to solve your specific business problems.

Performance

BDT performance is measured by testing individual processors on massive datasets with a large Spark cluster. Benchmark runtimes are produced in an Azure HDInsight cluster using 40 executors with 4 cores and 59 GB of memory each. Data sources include delimited and Parquet files on Azure Gen2 Blob storage.

Often we need to enrich a point dataset with attributes from a polygon layer. Our workflow takes 600 million point locations, calculates which US Postal polygon contains each point, and tags the points with zipcode information.

| points | polygons | process | time |
|---|---|---|---|
| 600 million points (128GB) | US Zipcodes (26MB) | ProcessorPointInPolygon | 4.2 minutes |

Another common process is finding the nearest line for each point. Our workflow enriches a point dataset with the name of, and distance to, the nearest street segment. A required input to this process is the radius: how far away, in decimal degrees, to search for a street segment. Our input (0.001) searches within a city block for the nearest street. Larger values take longer to process.

| points | polylines | process | radius | time |
|---|---|---|---|---|
| 600 million points (128GB) | 50 million streets (50GB) | ProcessorDistance | 0.001 | 6.6 minutes |
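To give a feel for what a radius of 0.001 decimal degrees means on the ground, the following is a minimal sketch that converts degrees to approximate meters. It assumes a spherical Earth with the WGS84 equatorial radius. The function name `degrees_to_meters` is hypothetical and is not part of BDT.

```python
import math

EARTH_RADIUS_M = 6378137.0  # WGS84 equatorial radius; spherical model is an assumption


def degrees_to_meters(deg, latitude=0.0, along="lat"):
    """Approximate ground distance covered by `deg` decimal degrees."""
    meters_per_degree = 2 * math.pi * EARTH_RADIUS_M / 360.0
    if along == "lon":
        # Meridians converge toward the poles, so east-west degrees shrink.
        meters_per_degree *= math.cos(math.radians(latitude))
    return deg * meters_per_degree


# North-south distance covered by the benchmark radius (roughly 111 m).
print(round(degrees_to_meters(0.001), 1))
# East-west distance covered by the same radius at 40 degrees latitude (shorter).
print(round(degrees_to_meters(0.001, latitude=40.0, along="lon"), 1))
```

Because the radius is expressed in degrees, the effective search distance in the east-west direction shrinks as latitude increases.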

System Requirements

- Apache Spark cluster on one of:

  - Cloudera/Hortonworks

  - Azure HDInsight

  - Amazon EMR

  - Azure/AWS Databricks

  - Google Dataproc

- Apache Spark 2.4.x is required.

- ArcGIS Pro 2.6 is recommended

- ArcGIS Enterprise (optional)

Setup

Azure Databricks Setup

This section covers how to install and set up Big Data Toolkit in Azure Databricks.

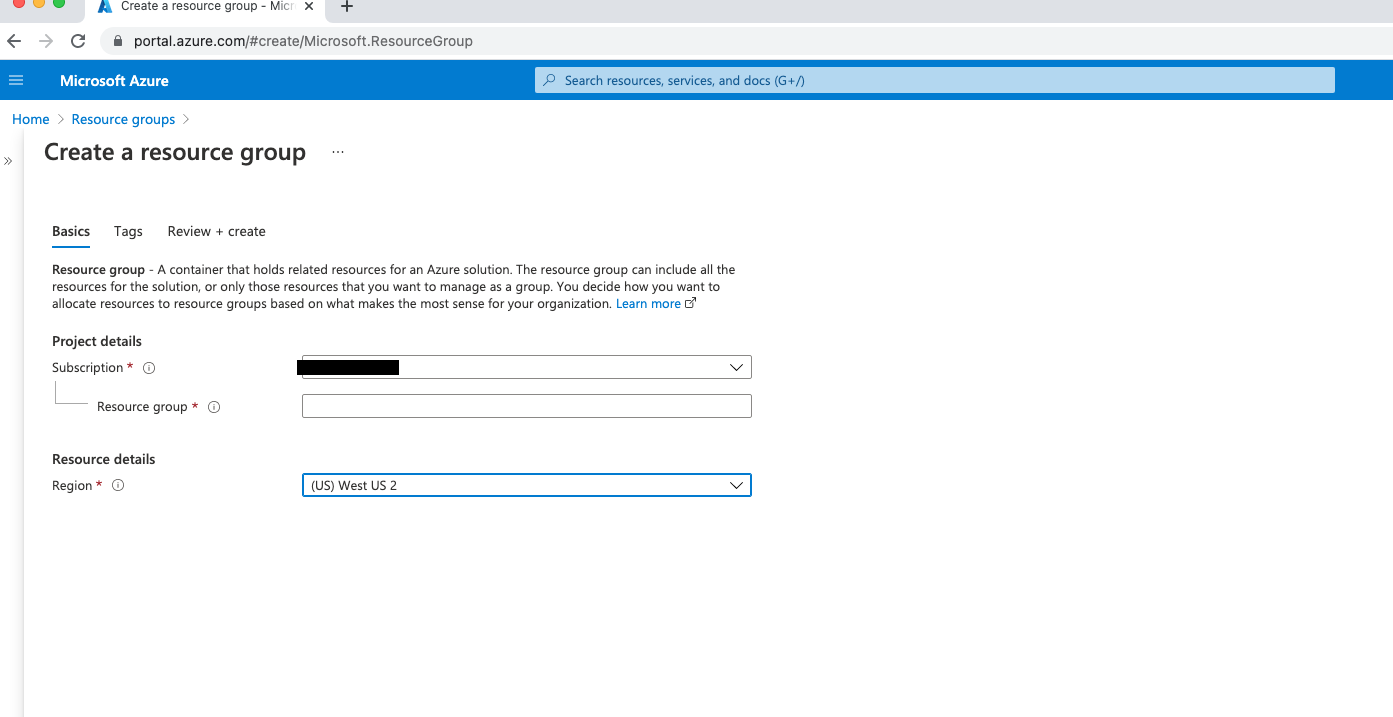

Create Resource Group

- Go to http://portal.azure.com. Under Resource Groups, add a new resource group.

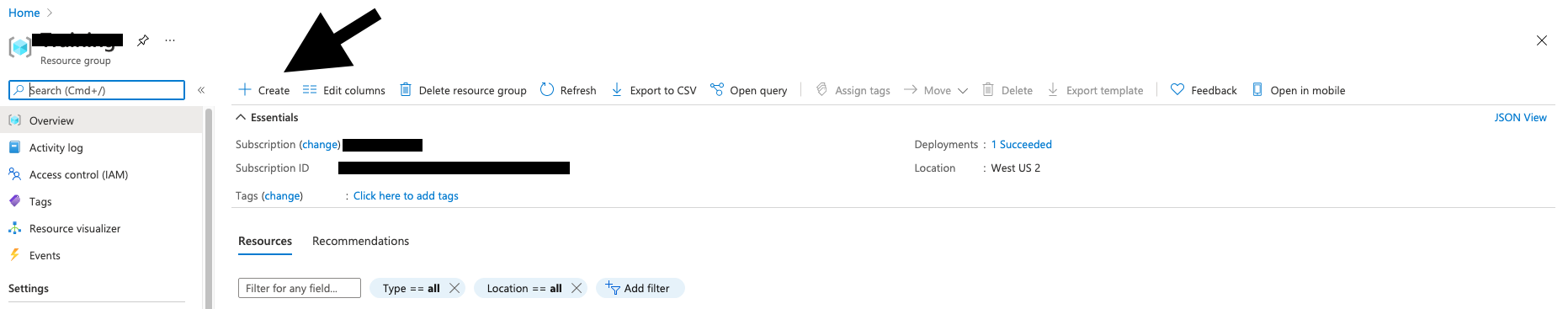

- Go to your resource group. Add a storage account in the same region as your resource group.

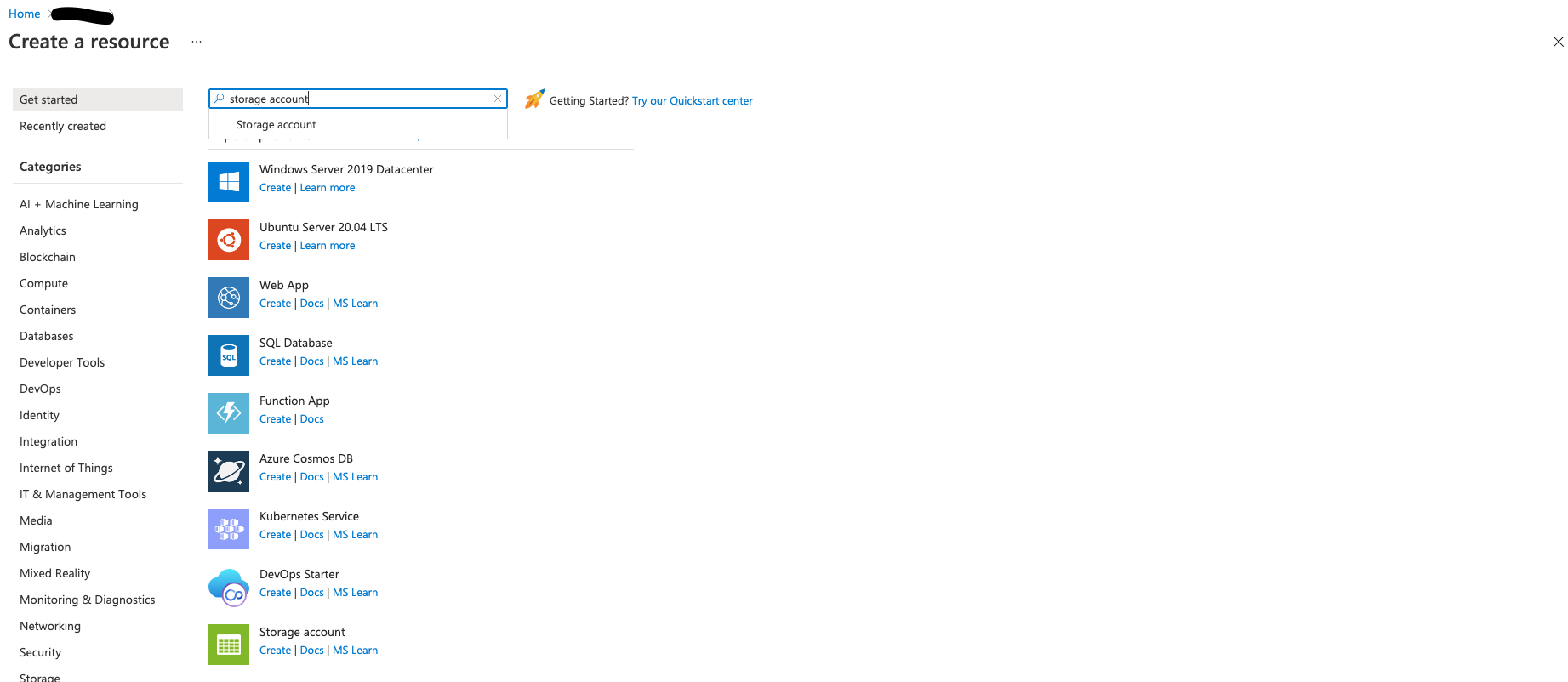

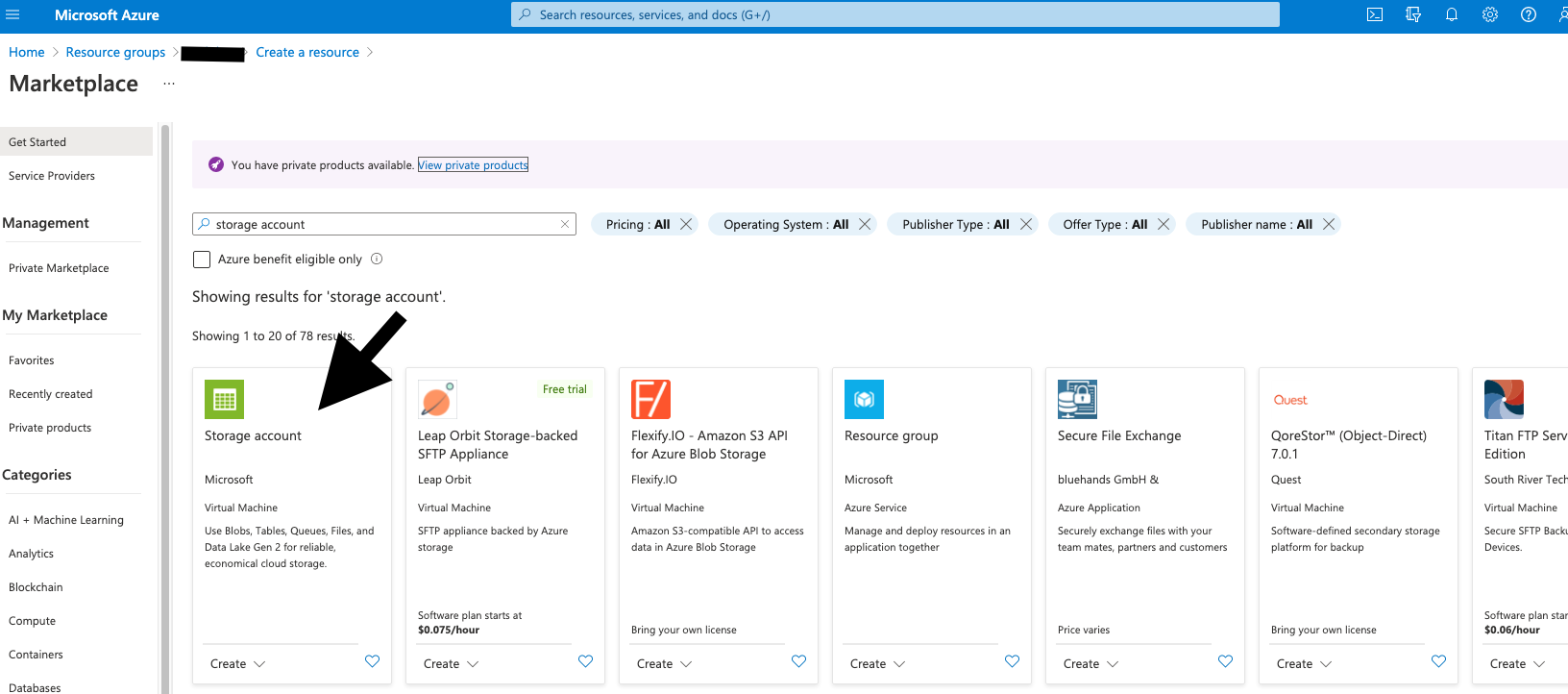

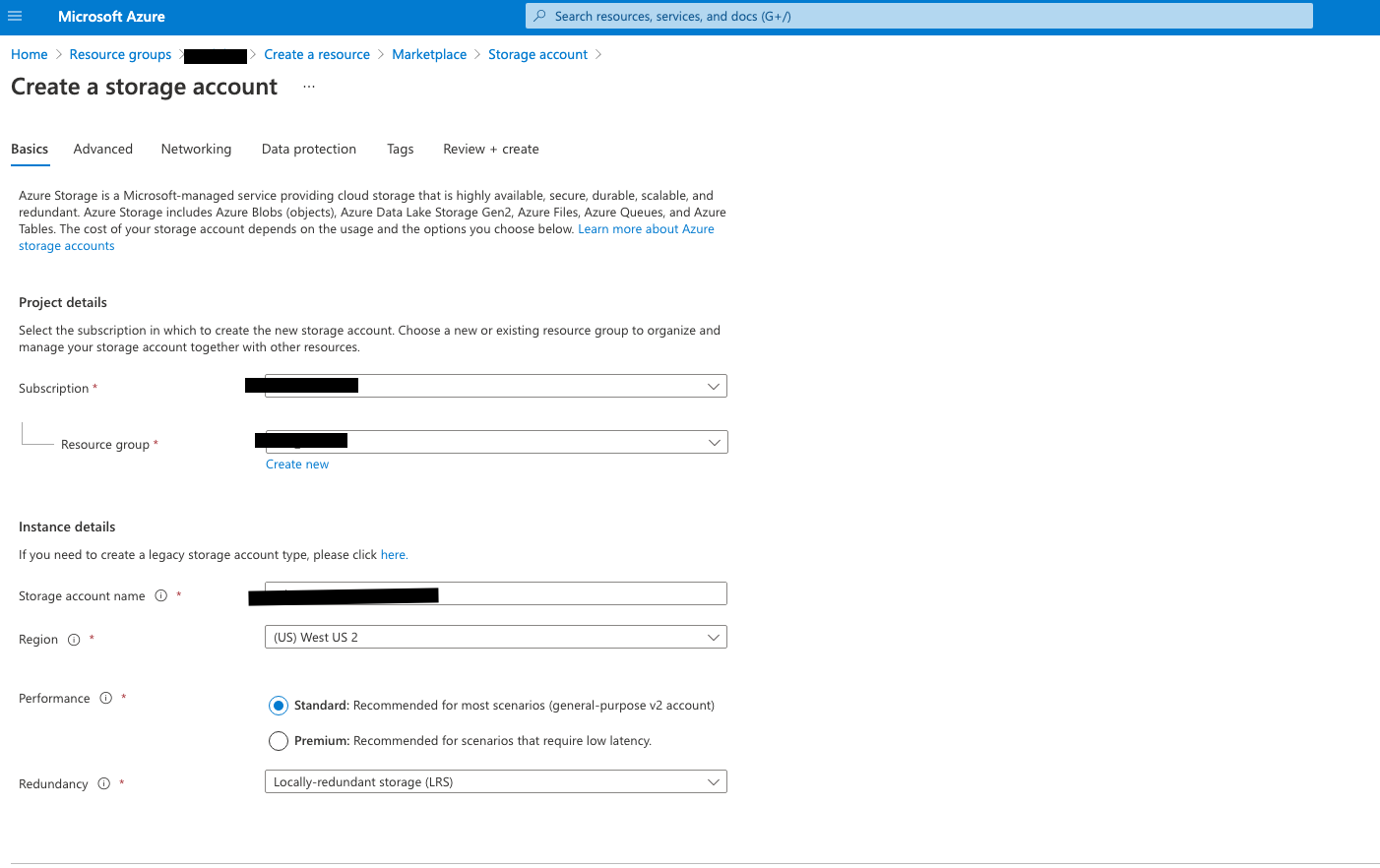

Create Storage Account

- Search for Storage Account and follow these steps:

- Use the same region, standard performance, and select LRS

- Make sure to enable hierarchical namespace

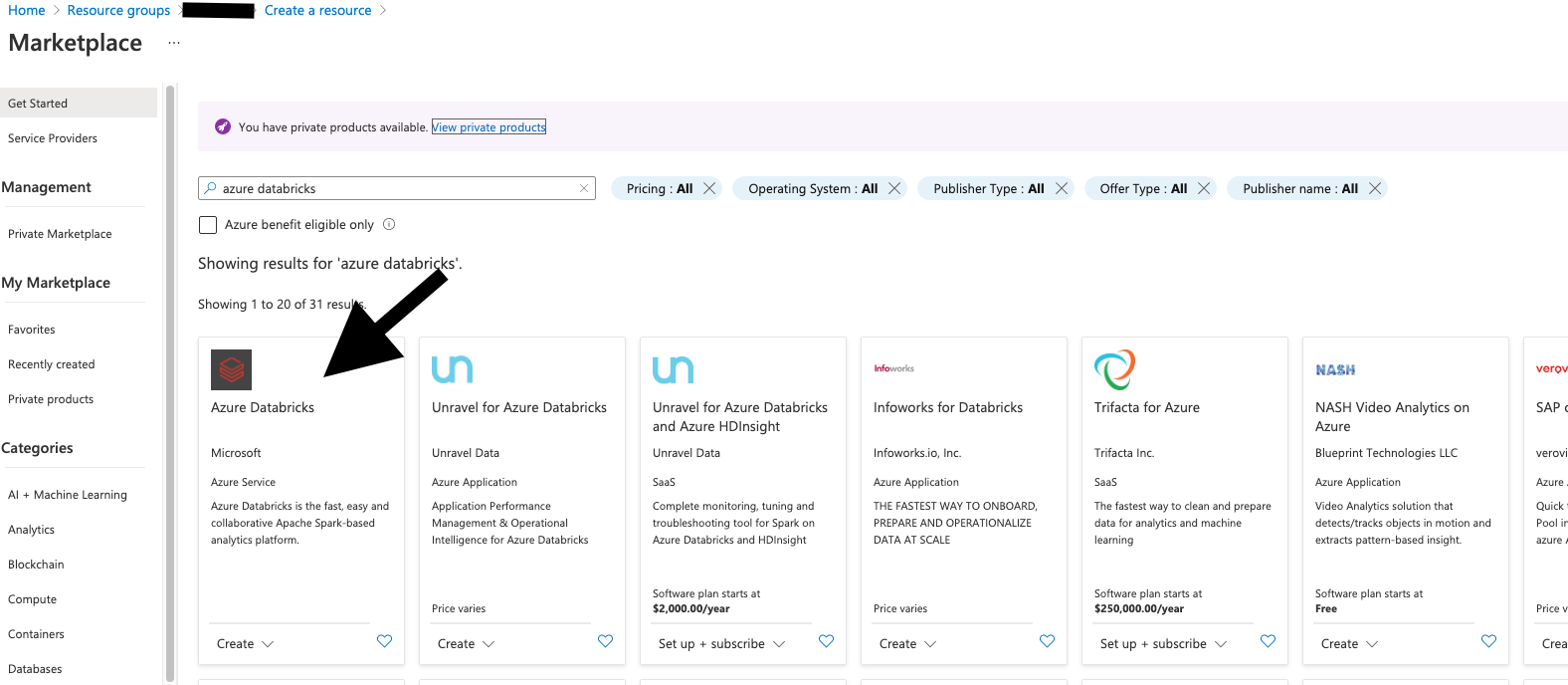

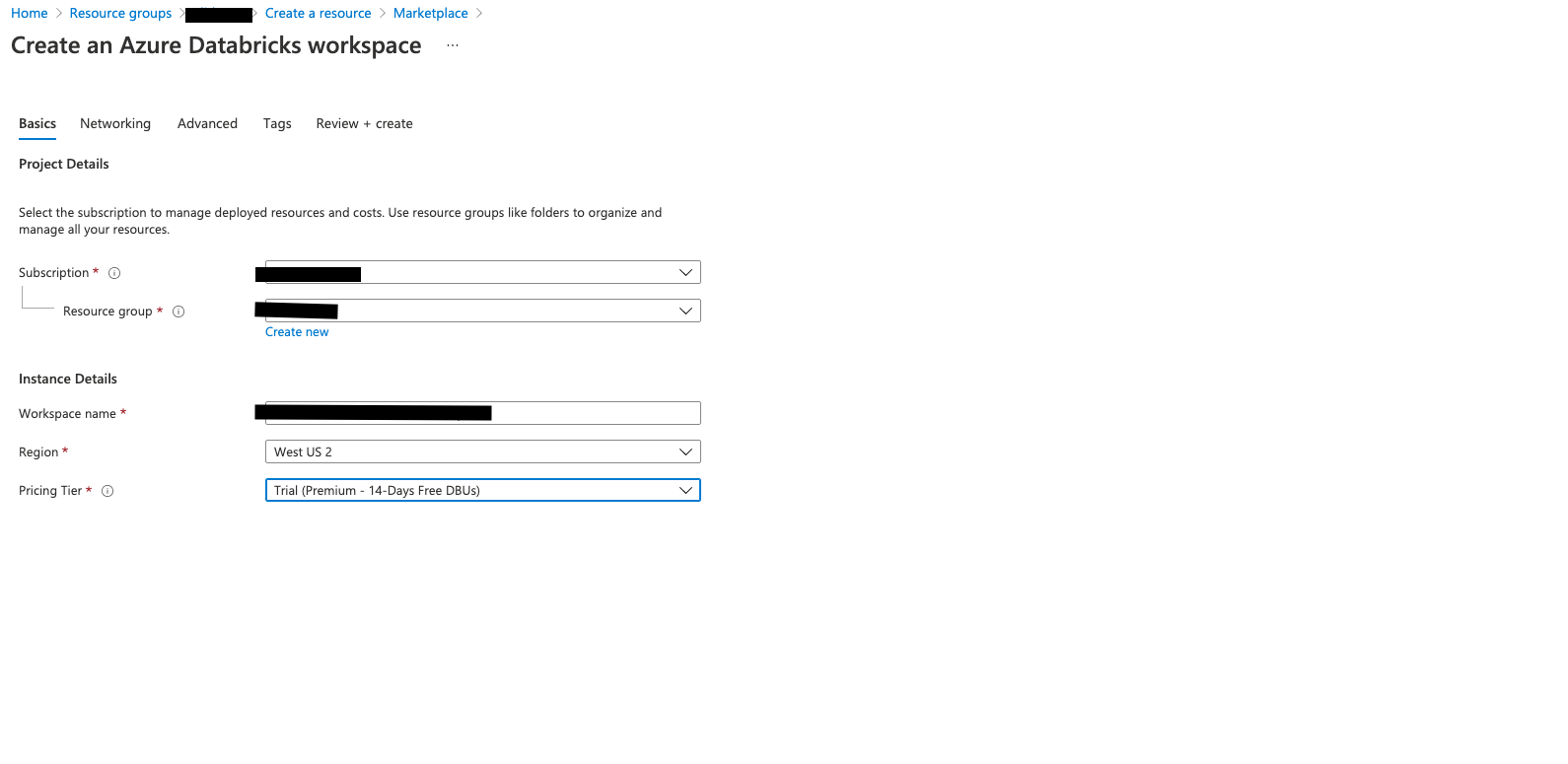

Add Databricks Service to Resource Group

- In your resource group, add Azure Databricks Service.

- Use trial to save on costs and select the appropriate region

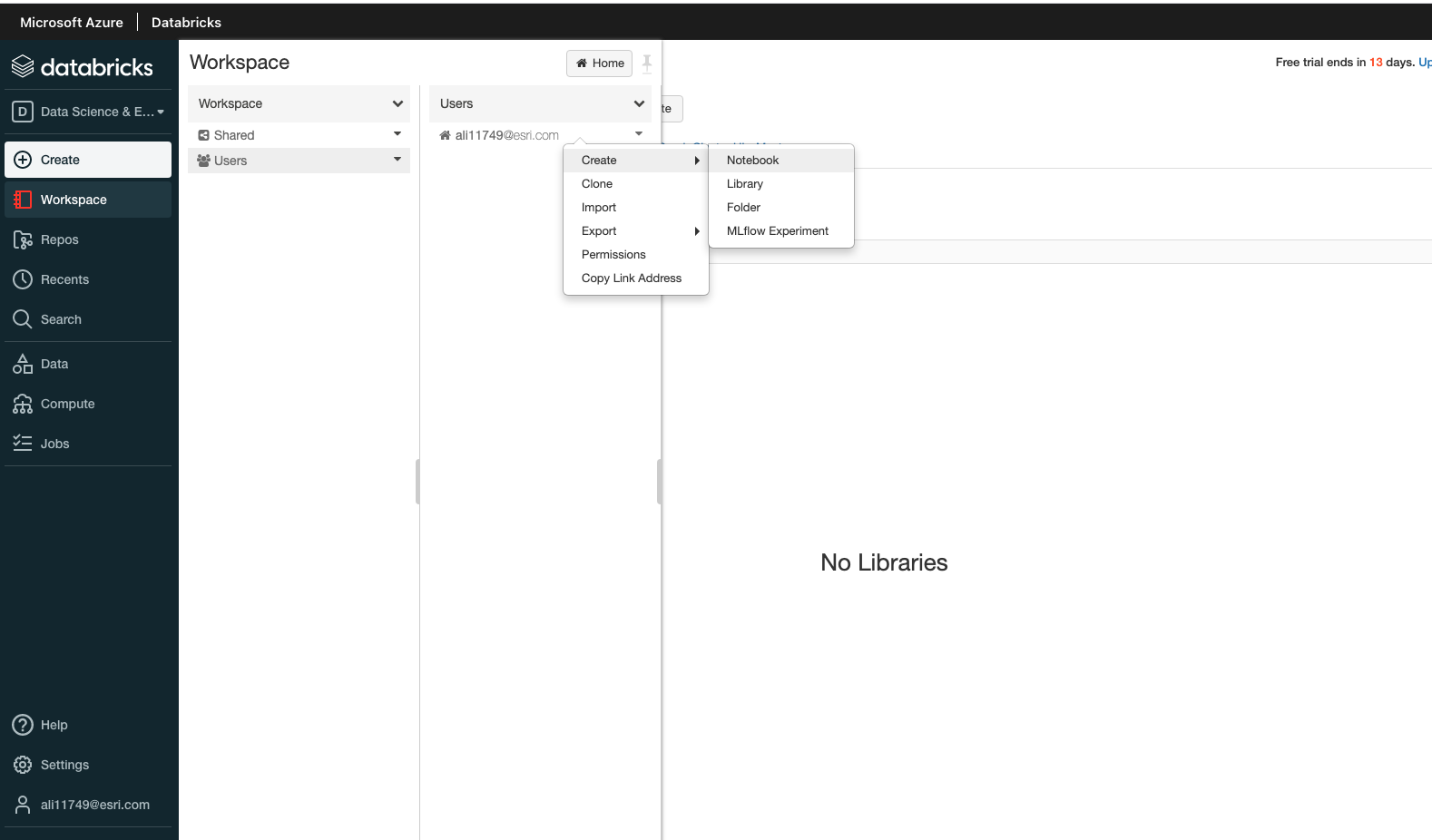

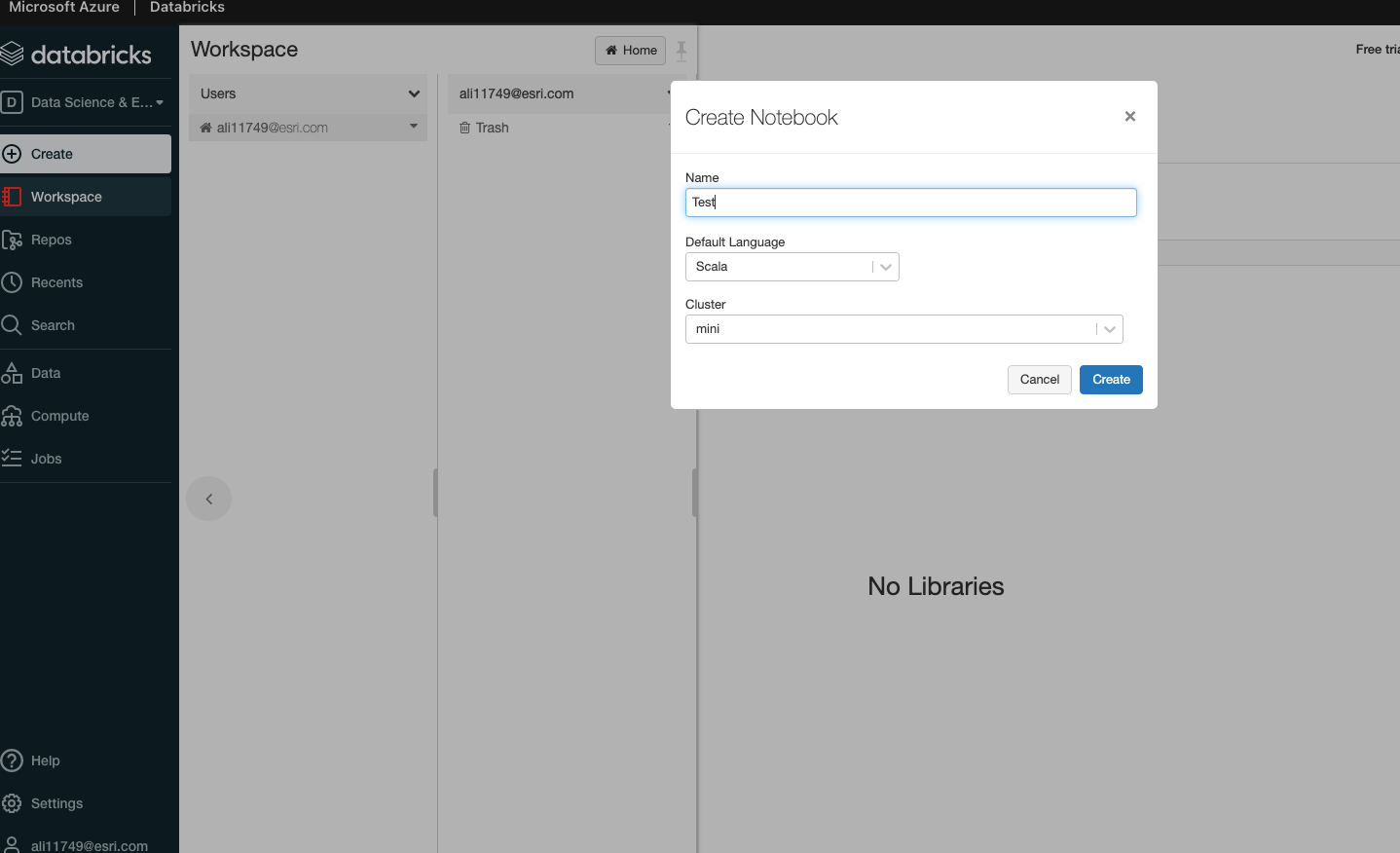

Add Notebook to Databricks Workspace

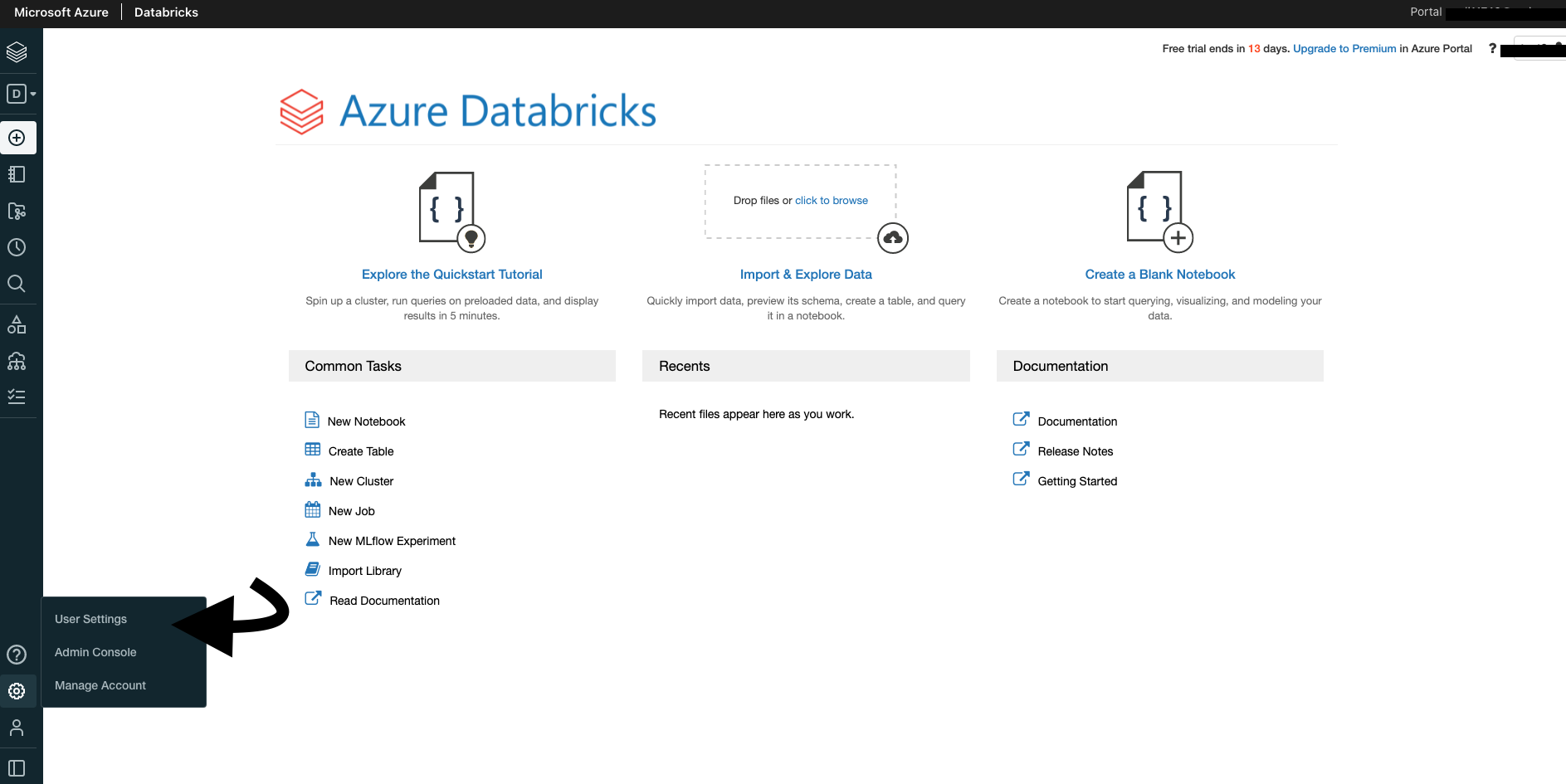

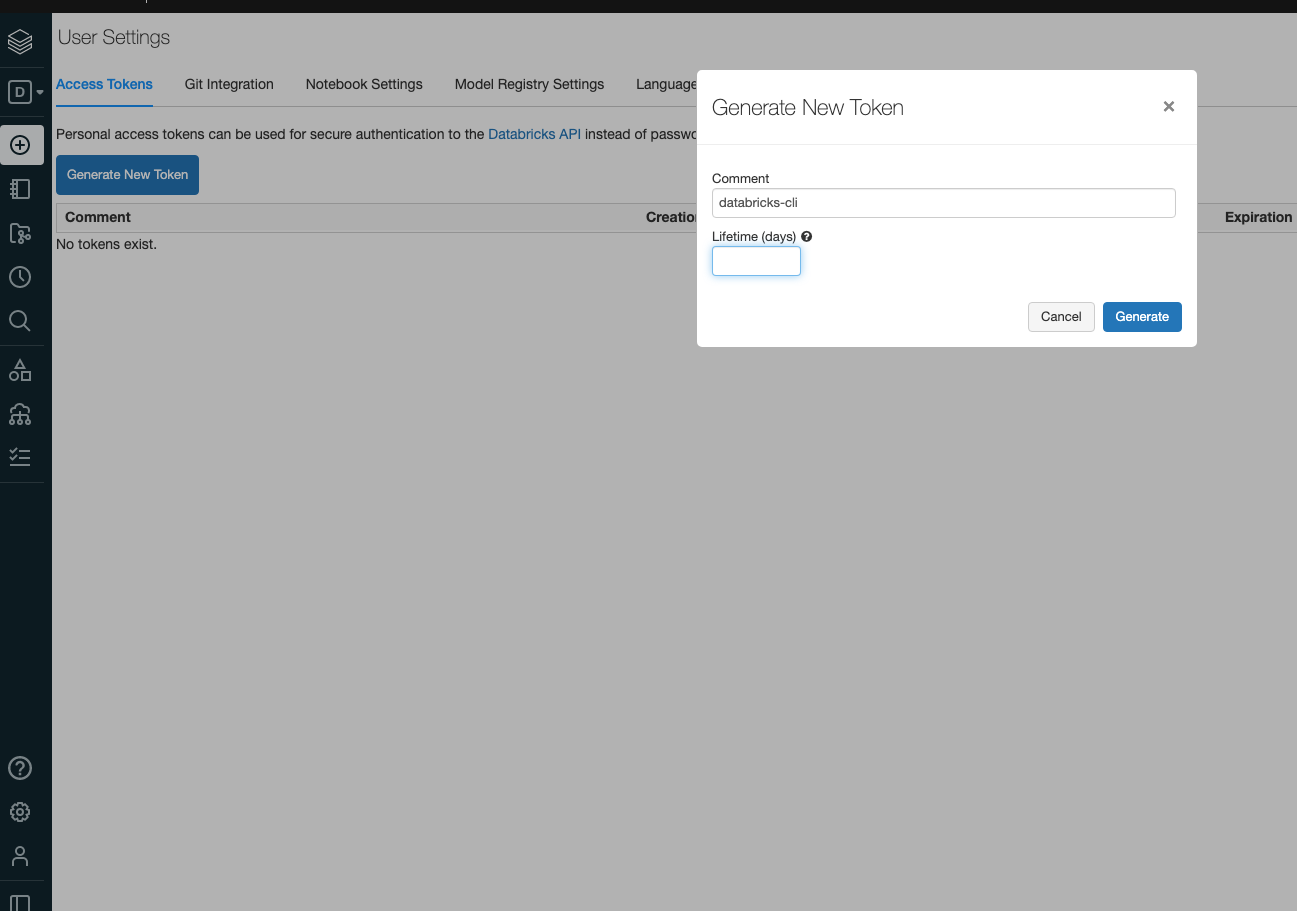

Install & configure Databricks CLI

- Launch Databricks workspace.

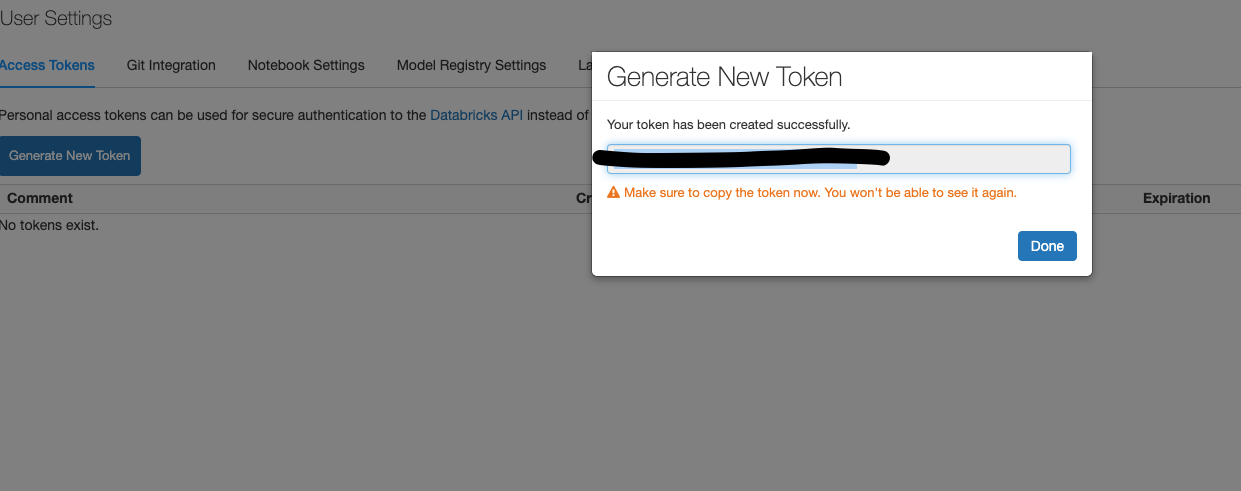

- Click on Generate New Token

- Save the token in a safe place

- Execute pip3 install databricks-cli

- Set the following environment variables in your .bash_profile:

export DATABRICKS_HOST=https://westus2.azuredatabricks.net

export DATABRICKS_TOKEN="YOUR TOKEN"

- In your terminal, execute source ~/.bash_profile

Use Principal Key to set up DBFS

Setting up a service principal in your Azure subscription is a prerequisite.

After it is set up, enter the following:

databricks secrets create-scope --scope adls --initial-manage-principal users

databricks secrets put --scope adls --key credential

Install Big Data Toolkit

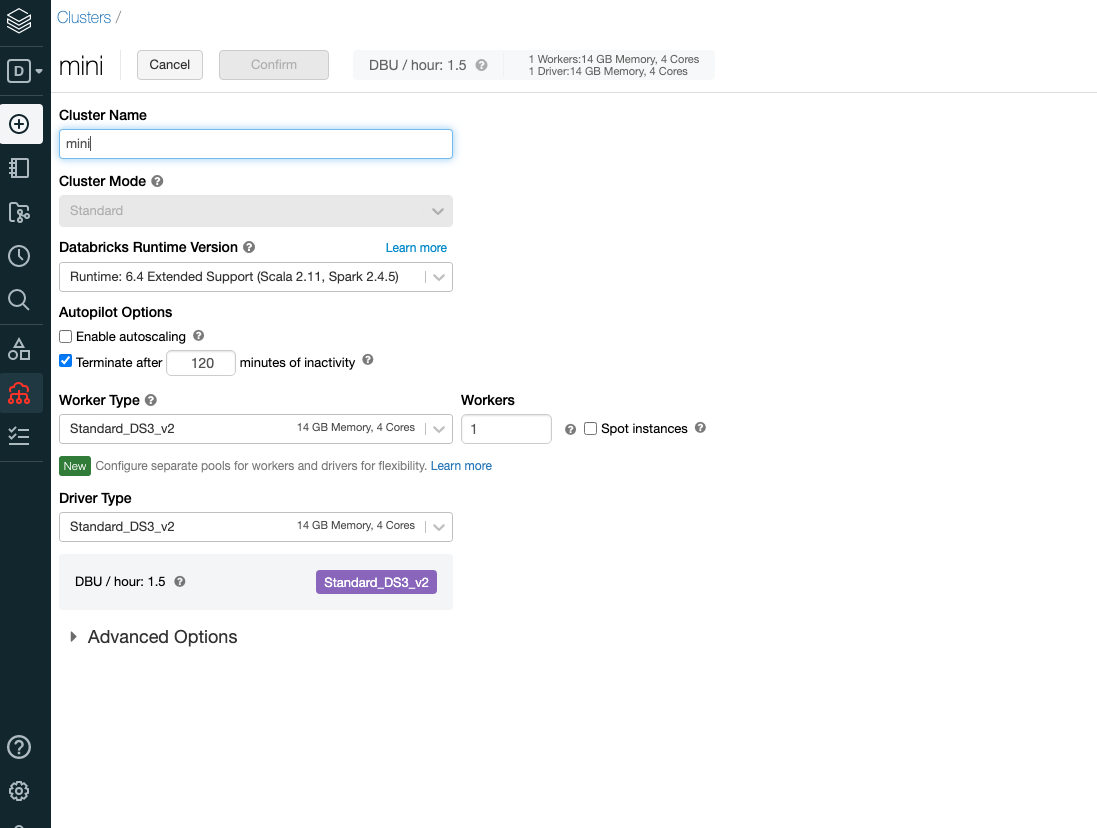

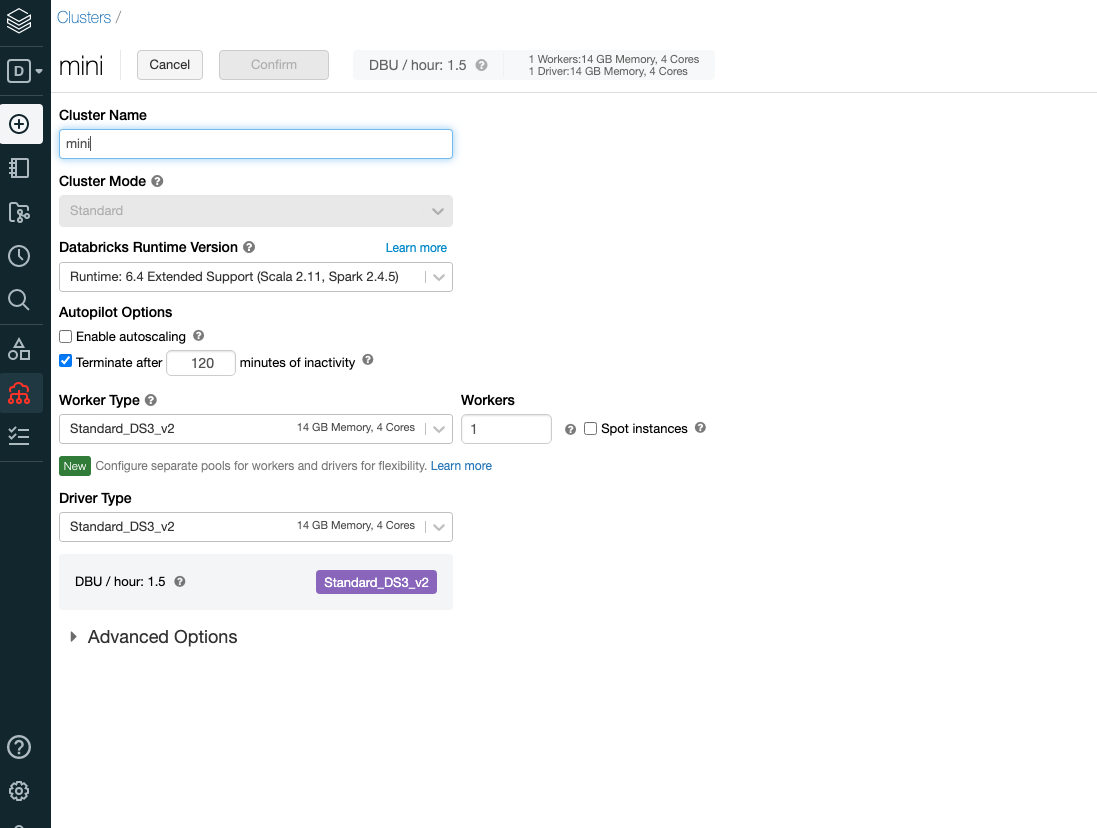

- Create a Cluster

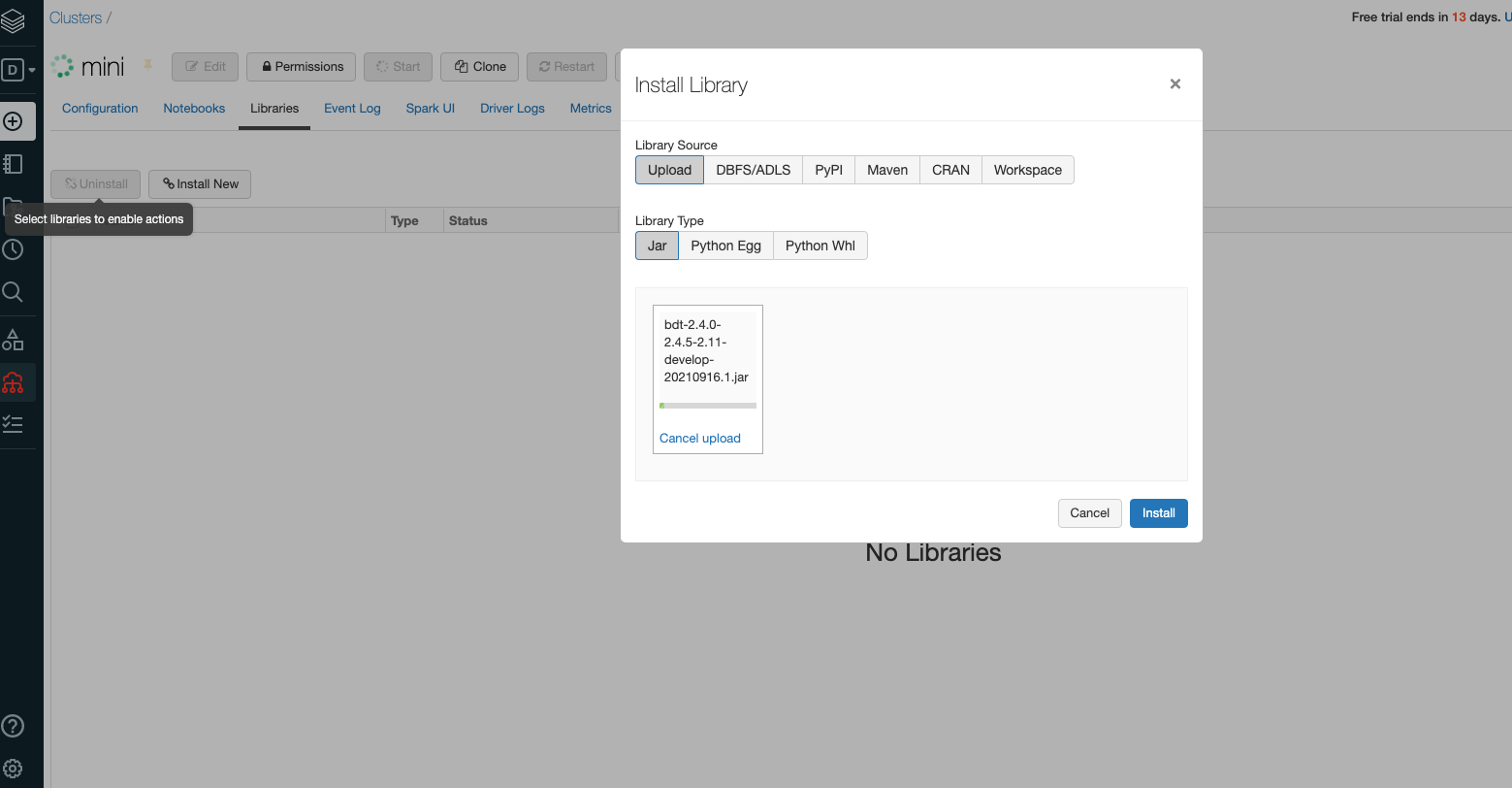

- Go to Libraries, click Install New, and upload the JAR file

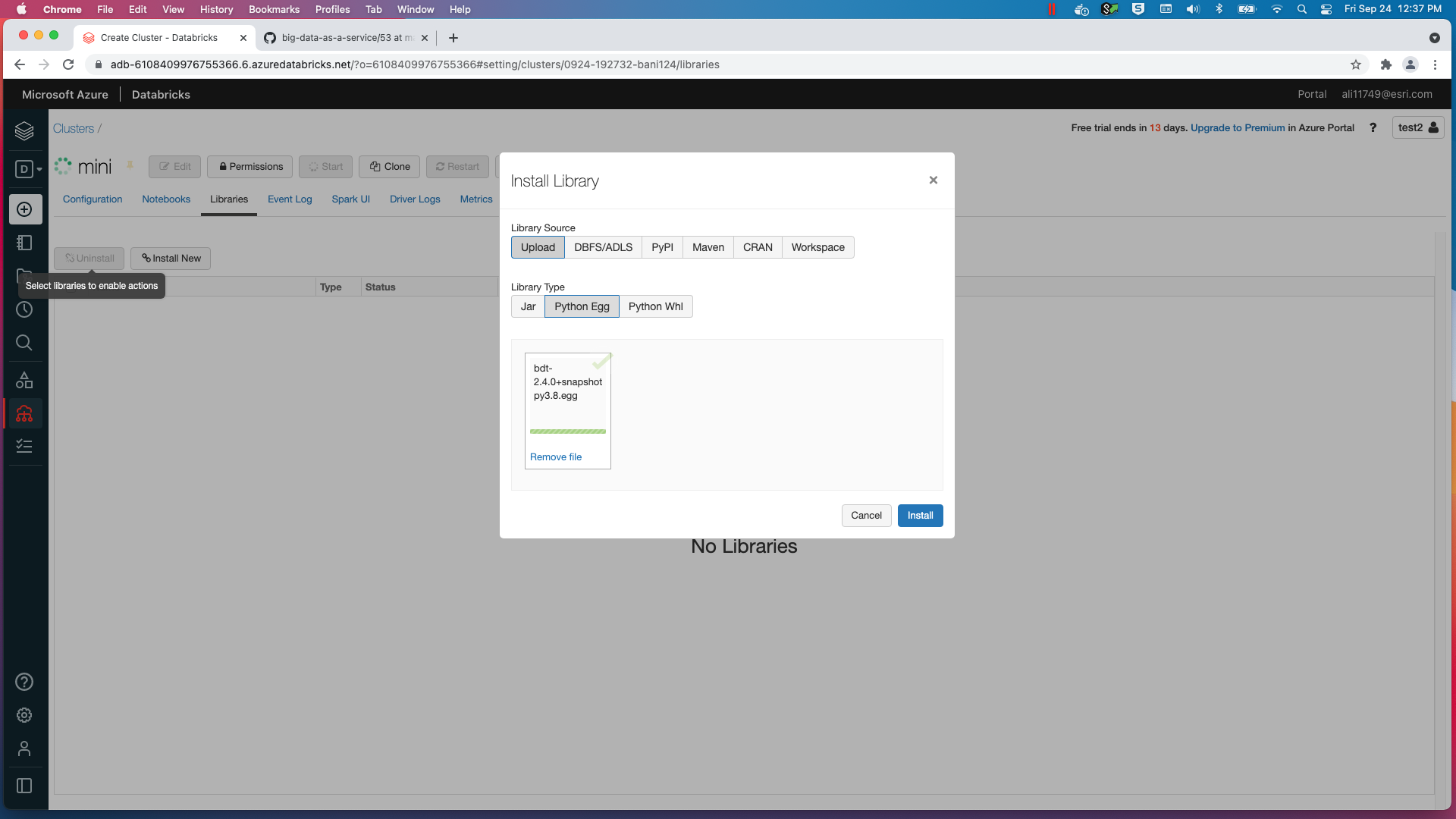

- Install the Python Egg using the Python Egg option and install 'deprecation' using PyPI

- BDT will not work on a High Concurrency cluster. Use a Standard cluster instead.

How to access Azure Blob Storage

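- You can access data in a blob container by mounting it. The example below accesses Azure Data Lake Storage Gen2 using OAuth 2.0 with an Azure service principal:

// configs holds the OAuth settings for the service principal and must be defined beforehand

dbutils.fs.mount(

source = "abfss://rawdata@cdcvh.dfs.core.windows.net",

mountPoint = "/mnt/data",

extraConfigs = configs)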

Local Jupyter Notebook Setup

This section covers how to use Big Data Toolkit with Jupyter Notebook locally.

Prerequisites

To set up Jupyter Notebook, the following prerequisites are needed:

- Spark 2.4.5

- Java

- Pyspark

- Spark environment variables need to be configured

- Jupyter needs to be installed with a package manager such as pip

- Install deprecation

# Set the following environment variables in your bash or zsh profile to use Spark:

export SPARK_HOME={spark-location}

export SPARK_LOCAL_IP=localhost

export PYSPARK_DRIVER_PYTHON=jupyter

export PYSPARK_DRIVER_PYTHON_OPTS='notebook --ip=0.0.0.0 --port 8888 --allow-root --no-browser --NotebookApp.token=""'

# Install Jupyter:

pip install notebook

# Install deprecation:

pip install deprecation

Launch Jupyter Notebook with BDT

Start the Notebook with the PySpark driver

pyspark \

--conf spark.submit.pyFiles="/Users/{User}/Downloads/{Egg}" \

--conf spark.jars="/Users/{User}/Downloads/{Jar}"

Start using BDT with Jupyter Notebook

Walkthrough

Importing Big Data Toolkit

import bdt

spark.withBDT("/mnt/data/test.lic")

- Use a test license to authorize BDT

ST Functions

spark.sql("""

SHOW USER FUNCTIONS LIKE 'ST_*'

""").show(5)

+------------+

| function|

+------------+

| st_area|

|st_asgeojson|

| st_ashex|

| st_asjson|

| st_asqr|

+------------+

spark.sql("""

SHOW USER FUNCTIONS LIKE 'H3*'

""").show()

+----------------+

| function|

+----------------+

| h3distance|

| h3kring|

|h3kringdistances|

| h3tochildren|

| h3togeo|

| h3togeoboundary|

| h3toparent|

| h3tostring|

+----------------+

- Big Data Toolkit ST functions get their "ST" prefix from the PostGIS naming convention.

- See the PostGIS documentation for details on this convention.

- The functions are highly optimized by the Apache Spark Catalyst engine.

- Big Data Toolkit also includes functions that don't start with ST. Generally speaking, a function that does not have a shape as an input or output will not start with the "ST" prefix.

- Examples of non-ST functions in Big Data Toolkit include the Uber H3 functions, among others.

ST Function Examples

- Big Data Toolkit can be used either in SQL or in Python, depending on what you are the most comfortable with.

- Three different ways of producing the same output DataFrame are shown below.

Do it all in Spark SQL

- Create a temporary view of the spark DataFrame so that it can be referenced in a Spark SQL Statement

- Write the entire query in SQL

df1 = spark.range(10)

df1.createOrReplaceTempView("df1")

df1_o = spark.sql("""

SELECT *, ST_Buffer(ST_MakePoint(x, y), 100.0, 3857) AS SHAPE

FROM

(SELECT *, LonToX(lon) as x, LatToY(lat) as y

FROM

(SELECT id, 360-180*rand() AS lon, 360-180*rand() AS lat

FROM df1))

""")

df1_o.show()

+---+------------------+------------------+--------------------+--------------------+--------------------+

| id| lon| lat| x| y| SHAPE|

+---+------------------+------------------+--------------------+--------------------+--------------------+

| 0| 209.7420517387036|278.69107966694395|2.3348378397488922E7|-1.64374601454058...|{ ...|

| 1| 235.6965940336686|283.60464624333105|2.6237624829536907E7|-1.35615066742416...|{ ...|

| 2|253.00393806010976| 183.6534861987022|2.8164269553544343E7| -406980.1151084085|{ ...|

| 3| 260.1383894167863| 193.1159327466332|2.8958473045658957E7| -1472980.4194758015|{ ...|

| 4|339.14158668989427|319.97117910147915| 3.775306873714188E7| -4870131.33823664|{ ...|

| 5|196.72906017810777|350.15890505545616| 2.189977880326623E7| -1100932.2276620644|{ ...|

| 6| 308.3393018275092| 218.5508226001505| 3.432417407099181E7| -4657533.480692809|{ ...|

| 7|230.71769139172113| 319.8814906385373| 2.568337592272603E7| -4883178.711132153|{ ...|

| 8| 328.6877953661514| 183.3260391179804| 3.658935801012368E7| -370461.10529312206|{ ...|

| 9|290.42449965744925|189.24602659107038| 3.23299074157585E7| -1033759.5256822291|{ ...|

+---+------------------+------------------+--------------------+--------------------+--------------------+
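The LonToX and LatToY calls in the query above convert degrees to Web Mercator meters. The following is a minimal sketch of the standard spherical Web Mercator (EPSG:3857) forward projection in plain Python. It assumes that BDT's functions follow this standard formula. The x values in the sample output are consistent with that assumption, but the function names `lon_to_x` and `lat_to_y` below are hypothetical stand-ins, not BDT APIs.

```python
import math

SPHERE_RADIUS_M = 6378137.0  # radius used by spherical Web Mercator


def lon_to_x(lon_deg):
    """Longitude in degrees to Web Mercator x in meters."""
    return SPHERE_RADIUS_M * math.radians(lon_deg)


def lat_to_y(lat_deg):
    """Latitude in degrees (strictly between -90 and 90) to Web Mercator y in meters."""
    return SPHERE_RADIUS_M * math.asinh(math.tan(math.radians(lat_deg)))


# Compare with the x value shown for id 0 in the table above.
print(lon_to_x(209.7420517387036))
# A conventional latitude, for reference.
print(lat_to_y(45.0))
```

Only x is compared against the sample here because the sample latitudes fall outside the usual range of -90 to 90 degrees.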

Hybrid of Spark SQL and Python DataFrame API

- Using 'selectExpr' from the python DataFrame API allows for a hybrid approach

- This syntax allows for the use of SQL, but makes the query less complicated to write

df2 = spark.range(10)\

.selectExpr("id","360-180*rand() lon","180-90*rand() lat")\

.selectExpr("*","LonToX(lon) x","LatToY(lat) y")\

.selectExpr("*","ST_Buffer(ST_MakePoint(x,y), 100.0, 3857) SHAPE")

df2.show()

+---+------------------+------------------+--------------------+--------------------+--------------------+

| id| lon| lat| x| y| SHAPE|

+---+------------------+------------------+--------------------+--------------------+--------------------+

| 0| 272.2434268912087| 99.48671731291171| 3.030599965334515E7|1.5876415679293292E7|{ ...|

| 1|357.53193763641457|158.27029821614542| 3.980027324001811E7| 2479103.475519385|{ ...|

| 2|265.19248738341645|134.20606970025696|2.9521092657723542E7| 5747387.678019282|{ ...|

| 3|328.91483911816016|109.22908582796377|3.6614632404985085E7|1.1324393353312327E7|{ ...|

| 4| 198.1155773828232| 99.50944059423078|2.2054125192471266E7|1.5861086452229302E7|{ ...|

| 5|183.39843143144566| 113.097849987619| 2.041581999923363E7|1.0128237129727399E7|{ ...|

| 6| 241.2867789263583|170.76182038746066|2.6859921365231376E7| 1032874.515275041|{ ...|

| 7|192.59185824421752| 98.05268650607889| 2.143922759067662E7| 1.692579219007473E7|{ ...|

| 8|244.69326236261554|175.35379731732678|2.7239129366751254E7| 517780.7017250775|{ ...|

| 9|317.54181519860066|157.47594852504096|3.5348593173480004E7| 2574561.293889059|{ ...|

+---+------------------+------------------+--------------------+--------------------+--------------------+

Processors

- Processors are highly optimized functions that are typically designed to handle more complex tasks than ST Functions.

- However, some simpler ST Functions still have a processor equivalent (like ST_Area and ProcessorArea)

- Processors require that input dataframes have metadata for their shape columns.

- Adding metadata gives the processor information about the geometry type and spatial reference (wkid)

- An error will be thrown if a processor receives the wrong geometry type

- Some processors require wkid 3857, like distanceAllCandidates

Call ProcessorArea on df1:

- Calling a processor is relatively straightforward: pass the dataframe(s) as the first parameters, followed by any optional parameters

- This will calculate the area of each buffered point; the areas should be identical (up to a certain floating point precision)

df1_m = df1_o.withMeta("POLYGON", 3857)

area = P.area(df1_m)

area.show(5)

+---+------------------+------------------+--------------------+--------------------+--------------------+------------------+

| id| lon| lat| x| y| SHAPE| shape_area|

+---+------------------+------------------+--------------------+--------------------+--------------------+------------------+

| 0| 209.7420517387036|278.69107966694395|2.3348378397488922E7|-1.64374601454058...|{ ...| 31393.50203038831|

| 1| 235.6965940336686|283.60464624333105|2.6237624829536907E7|-1.35615066742416...|{ ...| 31393.50203038831|

| 2|253.00393806010976| 183.6534861987022|2.8164269553544343E7| -406980.1151084085|{ ...| 31393.50203039233|

| 3| 260.1383894167863| 193.1159327466332|2.8958473045658957E7| -1472980.4194758015|{ ...| 31393.50203039616|

| 4|339.14158668989427|319.97117910147915| 3.775306873714188E7| -4870131.33823664|{ ...|31393.502030451047|

+---+------------------+------------------+--------------------+--------------------+--------------------+------------------+
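The areas above are slightly smaller than the area of a true circle of radius 100 (about 31415.93), which is expected when a buffer is represented as a polygon. The following is a small sketch of that arithmetic. The vertex count of 96 is an inference from the sample numbers, not a documented BDT setting, and `inscribed_polygon_area` is a hypothetical helper.

```python
import math


def inscribed_polygon_area(radius, vertices):
    """Area of a regular polygon whose vertices lie on a circle of the given radius."""
    return 0.5 * vertices * radius ** 2 * math.sin(2 * math.pi / vertices)


circle_area = math.pi * 100.0 ** 2
polygon_area = inscribed_polygon_area(100.0, 96)  # 96 vertices is an assumption

print(circle_area)
print(polygon_area)
```

Under this assumption the polygon area agrees with the shape_area column to several decimal places, and the remaining differences between rows are floating point noise.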

Concepts

Shape Column

Format

spark.sql("SELECT ST_MakePoint(-47.36744,-37.11551) AS SHAPE").printSchema()

root

|-- SHAPE: struct (nullable = false)

| |-- WKB: binary (nullable = true)

| |-- XMIN: double (nullable = true)

| |-- YMIN: double (nullable = true)

| |-- XMAX: double (nullable = true)

| |-- YMAX: double (nullable = true)

Shape is stored as a struct for easier handling and processing.

The struct is composed of WKB, XMIN, YMIN, XMAX, and YMAX values.

Transform the Shape struct to WKT before persisting to CSV.

Parquet can store the Shape struct without additional transformation.
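To make the struct layout concrete, here is a minimal pure-Python sketch that builds the same five fields for a point and converts them back to WKT. It assumes standard OGC little-endian WKB for a 2D point. The helpers `make_point_shape` and `shape_to_wkt` are hypothetical illustrations and not BDT functions; in BDT the equivalent work is done by ST_MakePoint and ST_AsText.

```python
import struct


def make_point_shape(x, y):
    """Build a dict mirroring the Shape struct fields for a single point."""
    # Byte order flag 1 = little endian, geometry type 1 = Point, then x and y doubles.
    wkb = struct.pack("<BIdd", 1, 1, x, y)
    # For a point, the envelope collapses to the point itself.
    return {"WKB": wkb, "XMIN": x, "YMIN": y, "XMAX": x, "YMAX": y}


def shape_to_wkt(shape):
    """Decode the point WKB and format it as WKT, suitable for a CSV column."""
    _byte_order, geom_type, x, y = struct.unpack("<BIdd", shape["WKB"])
    if geom_type != 1:
        raise ValueError("this sketch only handles points")
    return f"POINT ({x!r} {y!r})"


shape = make_point_shape(-47.36744, -37.11551)
print(len(shape["WKB"]))
print(shape_to_wkt(shape))
```

The binary WKB field is the reason a text format such as CSV needs a conversion step, while Parquet can hold the struct directly.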

Methods

df = (spark

.read

.format("com.esri.spark.shp")

.options(path="/data/poi.shp", format="WKB")

.load()

.selectExpr("ST_FromWKB(shape) as SHAPE")

.withMeta("Point", 4326))

df.selectExpr("ST_AsText(SHAPE) WKT").write.csv("/tmp/sink/wkt")

+---------------------------+

|WKT |

+---------------------------+

|POINT (-47.36744 -37.11551)|

+---------------------------+

Shape structs can be created using multiple methods:

Processors

- ProcessorAddShapeFromGeoJson

- ProcessorAddShapeFromJson

- ProcessorAddShapeFromWKB

- ProcessorAddShapeFromWKT

- ProcessorAddShapeFromXY

SQL functions

- ST_MakePoint

- ST_FromXY

- ST_MakeLine

- ST_MakePolygon

- ST_FromText

- ST_FromWKB

- ST_FromFGDBPoint

- ST_FromFGDBPolygon

- ST_FromFGDBPolyline

- ST_FromGeoJSON

- ST_FromHex

- ST_FromJSON

Export

Transform Shape structs into formats suitable for data interchange:

Metadata

# Python

df1 = (spark

.read

.format("csv")

.options(path="/data/taxi.csv", header="true", delimiter= ",", inferSchema="false")

.schema("VendorID STRING,tpep_pickup_datetime STRING,tpep_dropoff_datetime STRING,passenger_count STRING,trip_distance STRING,pickup_longitude DOUBLE,pickup_latitude DOUBLE,RatecodeID STRING,store_and_fwd_flag STRING,dropoff_longitude DOUBLE,dropoff_latitude DOUBLE,payment_type STRING,fare_amount STRING,extra STRING,mta_tax STRING,tip_amount DOUBLE,tolls_amount DOUBLE,improvement_surcharge DOUBLE,total_amount DOUBLE")

.load()

.selectExpr("tip_amount", "total_amount", "ST_FromXY(pickup_longitude, pickup_latitude) as SHAPE")

.withMeta("Point", 4326, shapeField = "SHAPE"))

df1.schema[-1].metadata

{'type': 33, 'wkid': 4326}

Metadata is set on the DataFrame and describes the geometry.

It describes the shapeField column that holds the Shape struct.

It includes the geometry type and spatial reference (wkid).

Parquet may persist metadata, but metadata must always be specified when reading CSV.

Metadata can be set on Sources by specifying geometryType, shapeField, and wkid, or on an existing DataFrame using ProcessorAddMetadata.
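The metadata dictionary shown above stores the geometry type as a number. The following sketch turns such a dictionary into a readable description. The value 33 for Point comes from the example output above; the other codes are an assumption based on the geometry type enumeration in the Esri Geometry API for Java and should be verified before being relied on. `describe_shape_metadata` is a hypothetical helper.

```python
# 33 = Point is taken from the example output; the remaining codes are assumed.
ESRI_GEOMETRY_TYPES = {
    33: "Point",
    197: "Envelope",
    550: "MultiPoint",
    1607: "Polyline",
    1736: "Polygon",
}


def describe_shape_metadata(metadata):
    """Summarize a shape column's metadata dictionary as text."""
    code = metadata.get("type")
    name = ESRI_GEOMETRY_TYPES.get(code, f"unknown type ({code})")
    return f"{name}, wkid {metadata.get('wkid')}"


print(describe_shape_metadata({"type": 33, "wkid": 4326}))
```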

Cell size

points_in_county = spark.bdt.pointInPolygon(

points,

county,

cellSize = 0.1,

)

Cell size represents the spatial partitioning grid size

Specified in the units of the spatial reference e.g. degrees for WGS84, meters for Web Mercator

Cell size is an optimization parameter used to improve efficiency of processing and is based on your data size and distribution, size of the cluster, and analysis workflow.

Setting doBroadcast = True ignores cellSize values during processing

Cell size must not be smaller than the following values:

For Spatial Reference WGS 84 GPS: 0.000000167638064 degrees

For Spatial Reference WGS 1984 Web Mercator: 0.0186264515 meters
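As a conceptual illustration of how a cell size partitions space, the sketch below assigns a point to a grid cell and counts the cells that cover an extent. This is a generic uniform-grid scheme under stated assumptions, not BDT's internal implementation, and `cell_index` and `cells_in_extent` are hypothetical names. The last lines note that the documented WGS 84 minimum is numerically close to 360 degrees divided by 2 to the power of 31; that is an observation from the arithmetic, not a documented rule.

```python
import math


def cell_index(x, y, cell_size):
    """Column and row of the grid cell that contains the point (x, y)."""
    return (math.floor(x / cell_size), math.floor(y / cell_size))


def cells_in_extent(extent, cell_size):
    """Number of grid cells needed to cover [xmin, ymin, xmax, ymax]."""
    xmin, ymin, xmax, ymax = extent
    cols = math.ceil((xmax - xmin) / cell_size)
    rows = math.ceil((ymax - ymin) / cell_size)
    return cols * rows


# Points in the same cell can be processed together in one partition.
print(cell_index(-117.19, 34.05, 1.0))
# Smaller cells mean more, finer partitions over the same extent.
print(cells_in_extent([-180.0, -90.0, 180.0, 90.0], 1.0))
print(cells_in_extent([-180.0, -90.0, 180.0, 90.0], 0.5))

# Observation only: the documented WGS 84 minimum is close to 360 / 2**31.
MIN_CELL_WGS84 = 0.000000167638064
print(math.isclose(360.0 / 2 ** 31, MIN_CELL_WGS84, rel_tol=1e-6))
```

A cell size that is too large puts many features in a few cells, while one that is too small creates many nearly empty cells, which is why the value depends on data size and distribution.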

Cache

# Python

points_in_county = spark.bdt.pointInPolygon(

points,

county,

cellSize = 0.1,

cache = True,

)

points_in_county_zp = spark.bdt.pointInPolygon(

points_in_county,

zip_codes,

cellSize = 0.1,

cache = True, #Setting cache here substantially reduces total run time

)

pssoverlaps = spark.bdt.powerSetStats(

points_in_county_zp,

statsFields = ["TotalInsuredValue"],

geographyIds = ["CY_ID","ZP_ID"],

categoryIds = ["Quarter","LineOfBusiness"],

idField = "Points_ID",

cache = False

)

pssoverlaps.cache() #same as setting cache = True above

pssoverlaps.write.mode("overwrite").parquet("/tmp/sink")

pssoverlaps.write.mode("overwrite").csv("/tmp/csv")

Cache uses the DataFrame API cache method. All processors have this option, and it defaults to false. Caching consumes memory but increases the efficiency of multistep workflows.

As shown in the example, leaving cache set to false instead of true can greatly increase the time it takes to run downstream steps.

With multiple sinks, setting cache to true is beneficial: the result is stored once and can then be written out to multiple locations.

Calling the DataFrame cache method is the same as setting cache to True.
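The benefit with multiple sinks follows from lazy evaluation: without a cache, each write re-runs the steps that produce the DataFrame. The toy class below imitates that behavior in plain Python so it can be run without a cluster. `LazyFrame` is a hypothetical stand-in and is not a Spark or BDT class.

```python
class LazyFrame:
    """Toy stand-in for a lazily evaluated DataFrame (not a Spark API)."""

    def __init__(self, compute):
        self._compute = compute
        self._use_cache = False
        self._cached = None
        self.runs = 0  # how many times the upstream work was executed

    def cache(self):
        self._use_cache = True
        return self

    def collect(self):
        if self._use_cache and self._cached is not None:
            return self._cached
        self.runs += 1
        result = self._compute()
        if self._use_cache:
            self._cached = result
        return result


def expensive_join():
    # Placeholder for an upstream point-in-polygon step.
    return [("p1", "county_a"), ("p2", "county_b")]


uncached = LazyFrame(expensive_join)
uncached.collect()  # first sink, e.g. Parquet
uncached.collect()  # second sink, e.g. CSV

cached = LazyFrame(expensive_join).cache()
cached.collect()
cached.collect()

print(uncached.runs, cached.runs)  # prints "2 1"
```

The cached frame does the upstream work once and serves the second sink from memory, which mirrors the trade-off described above: more memory used, less recomputation.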

Extent

An array of xmin, ymin, xmax, ymax that contains the spatial index

In units of the spatial reference e.g. degrees for WGS84, meters for Web Mercator, etc

Defaults to World Geographic [-180.0, -90.0, 180.0, 90.0]

When using a projected coordinate system, the extent must be specified

Try to tailor the extent for the data to reduce processing of empty areas and improve performance
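One simple way to tailor an extent is to take the bounding box of the data and pad it slightly. The sketch below shows this in plain Python for a small list of coordinates. `tailored_extent` is a hypothetical helper, and the padding value is an arbitrary choice for illustration.

```python
def tailored_extent(points, padding=0.0):
    """Return [xmin, ymin, xmax, ymax] covering all (x, y) points, plus padding."""
    if not points:
        raise ValueError("at least one point is required")
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return [min(xs) - padding, min(ys) - padding, max(xs) + padding, max(ys) + padding]


# Coordinates in degrees (WGS84); the extent uses the same units.
sample_points = [(-118.0, 33.5), (-117.0, 34.5), (-117.5, 34.0)]
print(tailored_extent(sample_points, padding=0.5))
```

On a cluster, the same minimum and maximum values could be computed with a DataFrame aggregation before being passed as the extent.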

LMDB

LMDB stands for Lightning Memory-Mapped Database and is used by BDT for routing and street network capabilities

LMDB deploys copies to every spark node with fast read access

Processors:

SQL Functions:

BDT v2

The title version number, for example ProcessorArea v2.1, indicates the version first available.

ProcessorArea was available starting in v2.1 while v2 indicates available since v2.0

If new Processor configuration options are added the version availability is noted next to the property e.g. - emitPippedOnly (_v2.1_)

Sources v2

A source can be thought of as the component that describes the data that the Spark application will consume.

SourceGeneric v2

Hocon

# csv

{

name = taxi

type = com.esri.source.SourceGeneric

format = csv

options = {

path = ./src/test/resources/taxi.csv

header = true

delimiter = ","

inferSchema = false

}

schema = "VendorID STRING,tpep_pickup_datetime STRING,tpep_dropoff_datetime STRING,passenger_count STRING,trip_distance STRING,pickup_longitude DOUBLE,pickup_latitude DOUBLE,RatecodeID STRING,store_and_fwd_flag STRING,dropoff_longitude DOUBLE,dropoff_latitude DOUBLE,payment_type STRING,fare_amount STRING,extra STRING,mta_tax STRING,tip_amount DOUBLE,tolls_amount DOUBLE,improvement_surcharge DOUBLE,total_amount DOUBLE, SHAPE STRING"

selectExpr = ["ST_FromXY(pickup_longitude, pickup_latitude) as SHAPE", "tip_amount", "total_amount"]

where = "VendorID = '2'"

geometryType = point

shapeField = SHAPE

wkid = 4326

cache = false

numPartitions = 8

}

Hocon

# parquet

{

name = taxi

type = com.esri.source.SourceGeneric

format = parquet

options = {

path = ./src/test/resources/taxi.parquet

mergeSchema = true

}

where = "VendorID = '2'"

geometryType = point

shapeField = SHAPE

wkid = 4326

cache = false

numPartitions = 8

}

Hocon

# JDBC

{

name = service_area

type = com.esri.source.SourceGeneric

format = jdbc

options = {

url = "jdbc:sqlserver://localhost:1433;databaseName=bdt2;integratedSecurity=false"

user = "you"

password = "your secret"

// Microsoft Spatial Type function.

query = "SELECT *, Shape.STAsBinary() WKB FROM BFS_HEXAGON_SPLIT_ONE_T_2"

}

// BDT Spatial Type function.

selectExpr = ["M_ID", "ST_FromWKB(WKB) SHAPE"]

geometryType = Polygon

shapeField = SHAPE

wkid = 3857

}

# Python

# Deprecated as of v2.4. Use the Spark API instead.

spark.bdt.sourceGeneric(

"csv",

{"path": "./src/test/resources/taxi.csv", "header": "true", "delimiter": ",", "inferSchema": "true"},

schema = "VendorID STRING,tpep_pickup_datetime STRING,tpep_dropoff_datetime STRING,passenger_count STRING,trip_distance STRING,pickup_longitude DOUBLE,pickup_latitude DOUBLE,RatecodeID STRING,store_and_fwd_flag STRING,dropoff_longitude DOUBLE,dropoff_latitude DOUBLE,payment_type STRING,fare_amount STRING,extra STRING,mta_tax STRING,tip_amount DOUBLE,tolls_amount DOUBLE,improvement_surcharge DOUBLE,total_amount DOUBLE, SHAPE STRING",

selectExpr = ["ST_FromXY(pickup_longitude, pickup_latitude) as SHAPE", "tip_amount", "total_amount"],

where = "VendorID = '2'",

geometryType = "Point",

shapeField = "SHAPE",

wkid = 4326,

cache = True,

numPartitions = 8)

# Spark API

spark\

.read\

.format("csv")\

.options(path="./src/test/resources/taxi.csv", header="true", delimiter= ",", inferSchema="true")\

.schema("VendorID STRING,tpep_pickup_datetime STRING,tpep_dropoff_datetime STRING,passenger_count STRING,trip_distance STRING,pickup_longitude DOUBLE,pickup_latitude DOUBLE,RatecodeID STRING,store_and_fwd_flag STRING,dropoff_longitude DOUBLE,dropoff_latitude DOUBLE,payment_type STRING,fare_amount STRING,extra STRING,mta_tax STRING,tip_amount DOUBLE,tolls_amount DOUBLE,improvement_surcharge DOUBLE,total_amount DOUBLE, SHAPE STRING")\

.load()\

.selectExpr("ST_FromXY(pickup_longitude, pickup_latitude) as SHAPE", "tip_amount", "total_amount")\

.where("VendorID = '2'")\

.withMeta("Point", 4326, shapeField = "SHAPE")\

.repartition(8)\

.cache()

Read dataset from the external storage.

SourceGeneric is a BDT v2 module for reading generic file formats that Spark already supports.

- If the input data has a shape column:

- Specify a geometryType, shapeField, and wkid. This will set the metadata of the shape column.

- If the shape column is WKT, then use ST_FromText on the shape column in selectExpr.

- If the shape column is WKB, then use ST_FromWKB on the shape column in selectExpr.

- If the shape column is a shape struct, then no ST function is needed.

If the input data does not have a shape struct column, do not provide geometryType, shapeField, and wkid. These values will default to None.
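The rules above can be captured in a small helper that builds the selectExpr entry for a shape column. This is a sketch only: `shape_select_expr` and its `encoding` argument are hypothetical, while ST_FromText and ST_FromWKB are the BDT SQL functions named in the text.

```python
def shape_select_expr(column, encoding, alias="SHAPE"):
    """Build the selectExpr entry that turns a shape column into a Shape struct."""
    converters = {"wkt": "ST_FromText", "wkb": "ST_FromWKB"}
    kind = encoding.lower()
    if kind == "struct":
        # Already a Shape struct: no ST function is needed, only an optional rename.
        return column if column == alias else f"{column} as {alias}"
    if kind not in converters:
        raise ValueError(f"unsupported shape encoding: {encoding}")
    return f"{converters[kind]}({column}) as {alias}"


print(shape_select_expr("geom_wkt", "WKT"))
print(shape_select_expr("geom_wkb", "WKB"))
print(shape_select_expr("SHAPE", "struct"))
```

The resulting strings can be placed in the selectExpr list of a source configuration, alongside the geometryType, shapeField, and wkid settings.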

Configuration

Required

format

- description: Supported formats by Spark DataFrameReader

- type: String

options

- description: Lists Spark options for each format

- type: Map[String, String]

Optional

schema

- description: Specifies the schema by using the input DDL-formatted string.

- type: String

- default value: None

selectExpr

- description: Select a subset of the fields.

- type:

- default value:

where

- description: Where clause to filter the data.

- type: String

- default value: None

geometryType (v2.2)

- description: The geometry type to be read. For a standalone table, use None.

- type: String

- default value: None

shapeField (v2.2)

- description: The name of the SHAPE struct field.

- type: String

- default value: SHAPE

wkid (v2.2)

- description: The spatial reference id.

- type: String

- default value: 4326

cache

- description: To persist the outgoing DataFrame or not.

- type: Boolean

- default value: false

numPartitions

- description: Re-partitions the outgoing DataFrame.

- type: Int

- default value: None


SourceSQL v2

Hocon

{

name = zip

type = com.esri.source.SourceSQL

sql = "select 'hello' as world"

cache = false

}

Hocon

{

name = zip

type = com.esri.source.SourceSQL

sql = "SELECT * FROM parquet.`examples/src/main/resources/users.parquet`"

cache = false

}

Hocon

# Example for shape column with WKB values

{

name = zip

type = com.esri.source.SourceSQL

sql = "SELECT ST_FromWKB(SHAPE) FROM parquet.`examples/src/main/resources/users.parquet`"

geometryType = polygon

shapeField = SHAPE

wkid = 4326

cache = false

}

# Python

# Deprecated as of v2.4. Use the Spark API instead.

spark.bdt.sourceSQL(

"SELECT * FROM parquet.`./src/test/resources/States_st.parquet`",

geometryType = "Polygon",

shapeField = "SHAPE",

wkid = 4326,

cache = True)

# Spark API

spark\

.sql("SELECT * FROM parquet.`./src/test/resources/States_st.parquet`")\

.withMeta("Polygon", 4326, shapeField = "SHAPE")\

.cache()

com.esri.source.SourceSQL

Generate a static DataFrame from sql.

You can run SQL on files directly, for example:

SELECT * FROM parquet.`examples/src/main/resources/users.parquet`

- If the input data has a shape column:

- Specify a geometryType, shapeField, and wkid. This will set the metadata of the shape column.

- If the shape column is WKT, then use ST_FromText on the shape column in the sql statement.

- If the shape column is WKB, then use ST_FromWKB on the shape column in the sql statement.

- If the shape column is a shape struct, then no ST function is needed.

If the input data does not have a shape struct column, do not provide geometryType, shapeField, and wkid. These values will default to None.

SourceSQL is capable of reading directly from a Hive table.

Configuration

Required

- sql

- description: SQL to generate a static DataFrame or to read files directly.

- type: String

Optional

geometryType (v2.2)

- description: The geometry type to be read. For a standalone table, use None.

- type: String

- default value: None

shapeField (v2.2)

- description: The name of the SHAPE struct field.

- type: String

- default value: SHAPE

wkid (v2.2)

- description: The spatial reference id.

- type: String

- default value: 4326

cache

- description: To persist the outgoing DataFrame or not.

- type: Boolean

- default value: false

SourceFGDB v2

Hocon

{

name = bgs

type = com.esri.source.SourceFGDB

path = "./src/test/resources/Redlands.gdb"

table = BlockGroups

sql = "SELECT TOTPOP_CY, Shape FROM BlockGroups WHERE ID = 060650301011"

geometryType = Polygon

geometryField = Shape

shapeField = SHAPE

wkid = 4326

cache = false

numPartitions = 4

}

Hocon

{

name = standalone

type = com.esri.source.SourceFGDB

path = "./src/test/resources/standalone_table.gdb"

table = standalone_table

sql = "SELECT * FROM standalone_table"

geometryType = None

}

# Python

# Deprecated as of v2.4. Use the Spark API instead.

spark.bdt.sourceFGDB(

"./src/test/resources/Redlands.gdb",

"Policy_25M",

"SELECT * FROM Policy_25M",

geometryType = "Point",

geometryField = "Shape",

shapeField = "SHAPE",

wkid = 4326,

cache = True,

numPartitions = 4)

# Spark API

spark\

.read\

.format("com.esri.gdb")\

.options(path="./src/test/resources/Redlands.gdb", name="Policy_25M")\

.load()\

.selectExpr("ST_FromFGDBPoint(Shape) as NEW_SHAPE", "*")\

.drop("Shape")\

.withColumnRenamed("NEW_SHAPE", "SHAPE")\

.withMeta("Point", 4326)\

.repartition(4)\

.cache()

com.esri.source.SourceFGDB

Read and create a DataFrame from the file geodatabase. A Shape struct will be created when geometryType is not set to None. Unlike other sources, SourceFGDB sets the metadata on the shape field in the outgoing DataFrame.

Configuration

Required

path

- description: The path to the file geodatabase.

- type: String

table

- description: The name of the feature class or standalone table.

- type: String

sql

- description: Use SQL to select and/or filter fields. For a feature class, make sure to include the geometry field in the projection so that it can be turned into a Shape struct. The 'FROM' clause should use the table name specified with the 'table' configuration.

- type: String

geometryType

- description: The geometry type to be read. For a standalone table, use None.

- type: String

Optional

geometryField

- description: The name of the geometry field in the feature class. Not applicable when geometryType is set to None.

- type: String

- default value: Shape

shapeField

- description: The name of the SHAPE struct field. Not applicable when geometryType is set to None.

- type: String

- default value: SHAPE

wkid

- description: The spatial reference id.

- type: Integer

- default value: 4326

cache

- description: To persist the outgoing DataFrame or not.

- type: Boolean

- default value: false

numPartitions

- description: The number of partitions. If not specified, the default parallelism would be set.

- type: Integer

- default value: None

SourceSHP v2.2

Hocon

{

name = bgs

type = com.esri.source.SourceSHP

path = "./src/test/resources/Redlands.shp"

sql = "SELECT TOTPOP_CY, SHAPE FROM BlockGroups WHERE ID = 060650301011"

table = BlockGroups

geometryType = Polygon

shapeField = SHAPE

wkid = 4326

cache = false

numPartitions = 4

}

# Python

# Deprecated as of v2.4. Use the Spark API instead.

spark.bdt.sourceSHP(

"./src/test/resources/shp",

"Dev_Ops",

"select * from Dev_Ops",

"Point",

shapeField="SHAPE",

wkid=4326,

cache=True,

numPartitions=1)

# Spark API

spark\

.read\

.format("com.esri.spark.shp")\

.options(path="./src/test/resources/shp/", format="WKB")\

.load()\

.selectExpr("ST_FromWKB(shape) as SHAPE_NEW", "*")\

.drop("shape")\

.withColumnRenamed("SHAPE_NEW", "SHAPE")\

.withMeta("Point", 4326)\

.repartition(1)\

.cache()

com.esri.source.SourceSHP

Read and create a DataFrame from a shapefile. SourceSHP sets the metadata on the shape field in the outgoing DataFrame.

Configuration

Required

path

- description: The path to the shapefile.

- type: String

table

- description: The name of the feature class or standalone table.

- type: String

sql

- description: Use SQL to select and/or filter fields. 'FROM' clause should use the table name specified with 'table' configuration.

- type: String

geometryType

- description: The geometry type to be read. Possible values are Point, Polyline, Polygon.

- type: String

Optional

shapeField

- description: The name of the SHAPE struct field.

- type: String

- default value: SHAPE

wkid

- description: The spatial reference id.

- type: Integer

- default value: 4326

cache

- description: To persist the outgoing DataFrame or not.

- type: Boolean

- default value: false

numPartitions

- description: The number of partitions. If not specified, the default parallelism would be set.

- type: Integer

- default value: None

SourceHive v2

Hocon

{

name = taxi

type = com.esri.source.SourceHive

properties = {

"hive.metastore.uris" = "thrift://127.0.0.1:9083"

}

preHQL = []

mainHQL = "SELECT * FROM taxi"

database = default

cache = false

numPartitions = 8

}

Hocon

# Example for shape column with WKT values

{

name = taxi

type = com.esri.source.SourceHive

properties = {

"hive.metastore.uris" = "thrift://127.0.0.1:9083"

}

preHQL = []

mainHQL = "SELECT ST_FromText(SHAPE) FROM taxi"

database = default

geometryType = point

shapeField = SHAPE

wkid = 4326

cache = false

numPartitions = 8

}

# Python

# Deprecated as of v2.4. Use the Spark API instead.

spark.bdt.sourceHive(

{"hive.metastore.uris": "thrift://127.0.0.1:9083"},

"SELECT ST_FromText(SHAPE) FROM taxi",

[],

"default",

geometryType = "Point",

shapeField = "SHAPE",

wkid = 4326,

cache = False,

numPartitions = 8)

com.esri.source.SourceHive

Read from Hive storage.

- If the input data has a shape column:

- Specify a geometryType, shapeField, and wkid. This will set the metadata of the shape column.

- If the shape column is WKT, then use ST_FromText on the shape column in the preHQL or mainHQL statement.

- If the shape column is WKB, then use ST_FromWKB on the shape column in the preHQL or mainHQL statement.

- If the shape column is a shape struct, then no ST function is needed.

If the input data does not have a shape struct column, do not provide geometryType, shapeField, and wkid. These values will default to None.

https://stackoverflow.com/questions/53044191/spark-warehouse-vs-hive-warehouse

https://spark.apache.org/docs/latest/sql-data-sources-hive-tables.html

Configuration

Required

properties

- description: Hive properties

- type: Map[String, String]

mainHQL

- description: Hive QL

- type: String

Optional

preHQL

- description: A set of Hive QLs to execute before the main HQL.

- type: Array[String]

- default value: []

database

- description: The database to use

- type: String

- default value: default

geometryType (v2.2)

- description: The geometry type to be read. For a standalone table, use None.

- type: String

- default value: None

shapeField (v2.2)

- description: The name of the SHAPE struct field.

- type: String

- default value: SHAPE

wkid (v2.2)

- description: The spatial reference id.

- type: Integer

- default value: 4326

cache

- description: To persist the outgoing DataFrame or not.

- type: Boolean

- default value: false

numPartitions

- description: Re-partitions the outgoing DataFrame.

- type: Int

- default value: None

Processors v2

ProcessorAddFields v2

Hocon

{

input = _default

output = _default

type = com.esri.processor.ProcessorAddFields

expressions = ["(A/B) as C"]

cache = false

}

# Python

# v2.2

spark.bdt.processorAddFields(

df,

["(A/B) as C"],

cache = False)

# v2.3

spark.bdt.addFields(

df,

["(A/B) as C"],

cache = False)

com.esri.processor.ProcessorAddFields

Add new fields using SQL expressions.
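To illustrate what an expression such as "(A/B) as C" computes, here is a sketch that runs the same expression through Python's built-in sqlite3 module rather than Spark. This is only an analogy: Spark SQL and SQLite differ in details such as integer division, so the inputs are floats. The function name `add_fields`, the table name `t`, and the sample values are made up.

```python
import sqlite3


def add_fields(rows, expressions):
    """Append columns computed from SQL expressions to rows with columns A, B."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE t (A REAL, B REAL)")
    conn.executemany("INSERT INTO t VALUES (?, ?)", rows)
    # Keep the existing columns and add one column per expression.
    query = "SELECT *, " + ", ".join(expressions) + " FROM t ORDER BY rowid"
    cursor = conn.execute(query)
    names = [column[0] for column in cursor.description]
    result = [dict(zip(names, row)) for row in cursor.fetchall()]
    conn.close()
    return result


rows = add_fields([(10.0, 4.0), (9.0, 3.0)], ["(A/B) as C"])
print(rows)
```

Each output row keeps A and B and gains the new column C, which mirrors how the processor adds fields to the incoming DataFrame.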

Configuration

Required

- expressions

- description: A Seq of SQL expressions.

- type: Seq[String]

Optional

cache

- description: Persist the outgoing DataFrame.

- type: Boolean

- default value: false

input

- description: The name of the input.

- type: String

- default value: _default

output

- description: The name of the output.

- type: String

- default value: _default

ProcessorAddMetadata v2

Hocon

{

input = _default

output = _default

type = com.esri.processor.ProcessorAddMetadata

geometryType = Point

wkid = 4326

shapeField = SHAPE

cache = false

}

# Python

# v2.2

spark.bdt.processorAddMetadata(

df,

"Point",

4326,

shapeField = "SHAPE",

cache = False)

# v2.3

df.withMeta(

"Point",

4326,

"SHAPE")

com.esri.processor.ProcessorAddMetadata

Set the geometry and spatial reference metadata on the [[SHAPE_STRUCT]] column.

Configuration

Required

geometryType

- description: The geometry type. The possible values for geometryType are: Unknown, Point, Line, Envelope, MultiPoint, Polyline, Polygon.

- type: String

wkid

- description: The spatial reference id.

- type: Integer

Optional

shapeField

- description: The name of the SHAPE struct field.

- type: String

- default value: SHAPE

cache

- description: To persist the outgoing DataFrame or not.

- type: Boolean

- default value: false

input

- description: The name of the input.

- type: String

- default value: _default

output

- description: The name of the output.

- type: String

- default value: _default

ProcessorAddShapeFromGeoJson v2

Hocon

{

input = _default

output = _default

type = com.esri.processor.ProcessorAddShapeFromGeoJson

geoJsonField = GEOJSON

geometryType = Point

wkid = 4326

keep = false

shapeField = SHAPE

cache = false

}

# Python

# v2.2

spark.bdt.processorAddShapeFromGeoJson(

df,

"GEOJSON",

"Point",

wkid = 4326,

keep = False,

shapeField = "SHAPE",

cache = False)

# v2.3

spark.bdt.addShapeFromGeoJson(

df,

"GEOJSON",

"Point",

wkid = 4326,

keep = False,

shapeField = "SHAPE",

cache = False)

com.esri.processor.ProcessorAddShapeFromGeoJson

Add the SHAPE column based on the configured GeoJson field. The possible values for geometryType are: Unknown, Point, Line, Envelope, MultiPoint, Polyline, Polygon, GeometryCollection.
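As a rough illustration of what the GeoJson field holds, the sketch below parses a GeoJSON geometry string with Python's standard json module and pulls out the geometry type and coordinates. It does not use BDT; the function name `parse_geojson_geometry` and the sample string are made up.

```python
import json


def parse_geojson_geometry(text):
    """Return (geometry type, coordinates) from a GeoJSON geometry string."""
    geometry = json.loads(text)
    # GeometryCollection objects carry "geometries" instead of "coordinates"
    # and are rejected by this simple sketch.
    if "type" not in geometry or "coordinates" not in geometry:
        raise ValueError("not a GeoJSON geometry with coordinates")
    return geometry["type"], geometry["coordinates"]


sample = '{"type": "Point", "coordinates": [-117.19, 34.05]}'
geometry_type, coordinates = parse_geojson_geometry(sample)
print(geometry_type, coordinates)
```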

Consider using ST_FromGeoJSON

Configuration

Required

geoJsonField

- description: The name of the GeoJson field.

- type: String

geometryType

- description: The geometry type. The possible values for geometryType are: Unknown, Point, Line, Envelope, MultiPoint, Polyline, Polygon, GeometryCollection.

- type: String

Optional

wkid

- description: The spatial reference id.

- type: Integer

- default value: 4326

keep

- description: Whether to retain the original GeoJson field or not.

- type: Boolean

- default value: false

shapeField

- description: The name of the SHAPE Struct field.

- type: String

- default value: SHAPE

cache

- description: To persist the outgoing DataFrame or not.

- type: Boolean

- default value: false

input

- description: The name of the input.

- type: String

- default value: _default

output

- description: The name of the output.

- type: String

- default value: _default

ProcessorAddShapeFromJson v2

Hocon

{

input = _default

output = _default

type = com.esri.processor.ProcessorAddShapeFromJson

geometryType = Polygon

jsonField = JSON

keep = false

wkid = 4326

shapeField = SHAPE

cache = false

}

# Python

# v2.2

spark.bdt.processorAddShapeFromJson(

df,

"JSON",

"Point",

wkid = 4326,

keep = False,

shapeField = "SHAPE",

cache = False)

# v2.3

spark.bdt.addShapeFromJson(

df,

"JSON",

"Point",

wkid = 4326,

keep = False,

shapeField = "SHAPE",

cache = False)

com.esri.processor.ProcessorAddShapeFromJson

Add the SHAPE column based on the configured JSON field. The possible values for geometryType are: Unknown, Point, Line, Envelope, MultiPoint, Polyline, Polygon, GeometryCollection.

Consider using ST_FromJson

Configuration

Required

jsonField

- description: The name of the JSON field.

- type: String

geometryType

- description: The geometry type. The possible values for geometryType are: Unknown, Point, Line, Envelope, MultiPoint, Polyline, Polygon, GeometryCollection.

- type: String

Optional

wkid

- description: The spatial reference id.

- type: Integer

- default value: 4326

keep

- description: Whether to retain the original JSON field or not.

- type: Boolean

- default value: false

shapeField

- description: The name of the SHAPE struct field.

- type: String

- default value: SHAPE

cache

- description: To persist the outgoing DataFrame or not.

- type: Boolean

- default value: false

input

- description: The name of the input.

- type: String

- default value: _default

output

- description: The name of the output.

- type: String

- default value: _default

ProcessorAddShapeFromWKB v2

Hocon

{

input = _default

output = _default

type = com.esri.processor.ProcessorAddShapeFromWKB

wkbField = WKB

geometryType = Point

wkid = 4326

keep = false

shapeField = SHAPE

}

# Python

# v2.2

spark.bdt.processorAddShapeFromWKB(

df,

"WKB",

"Point",

wkid = 4326,

keep = False,

shapeField = "SHAPE",

cache = False)

# v2.3

spark.bdt.addShapeFromWKB(

df,

"WKB",

"Point",

wkid = 4326,

keep = False,

shapeField = "SHAPE",

cache = False)

com.esri.processor.ProcessorAddShapeFromWKB

Add the SHAPE column based on the configured WKB field. The possible values for geometryType are: Unknown, Point, Line, Envelope, MultiPoint, Polyline, Polygon, GeometryCollection.

Consider using ST_FromWKB

Configuration

Required

wkbField

- description: The name of the WKB field.

- type: String

geometryType

- description: The geometry type. The possible values for geometryType are: Unknown, Point, Line, Envelope, MultiPoint, Polyline, Polygon, GeometryCollection.

- type: String

Optional

wkid

- description: The spatial reference id.

- type: Integer

- default value: 4326

keep

- description: Whether to retain the original WKB field or not.

- type: Boolean

- default value: false

shapeField

- description: The name of the SHAPE struct field.

- type: String

- default value: SHAPE

cache

- description: To persist the outgoing DataFrame or not.

- type: Boolean

- default value: false

input

- description: The name of the input.

- type: String

- default value: _default

output

- description: The name of the output.

- type: String

- default value: _default

ProcessorAddShapeFromWKT v2

Hocon

{

input = _default

output = _default

type = com.esri.processor.ProcessorAddShapeFromWKT

wktField = WKT

geometryType = Point

wkid = 4326

keep = false

shapeField = SHAPE

cache = false

}

# Python

# v2.2

spark.bdt.processorAddShapeFromWKT(

df,

"WKT",

"Point",

wkid = 4326,

keep = False,

shapeField = "SHAPE",

cache = False)

# v2.3

spark.bdt.addShapeFromWKT(

df,

"WKT",

"Point",

wkid = 4326,

keep = False,

shapeField = "SHAPE",

cache = False)

com.esri.processor.ProcessorAddShapeFromWKT

Add the SHAPE column based on the configured WKT field. The possible values for geometryType are: Unknown, Point, Line, Envelope, MultiPoint, Polyline, Polygon, GeometryCollection.

Consider using ST_FromText

Configuration

Required

wktField

- description: The name of the WKT field.

- type: String

geometryType

- description: The geometry type. The possible values for geometryType are: Unknown, Point, Line, Envelope, MultiPoint, Polyline, Polygon, GeometryCollection.

- type: String

Optional

wkid

- description: The spatial reference id.

- type: Integer

- default value: 4326

keep

- description: Whether to retain the original WKT field or not.

- type: Boolean

- default value: false

shapeField

- description: The name of the SHAPE struct field.

- type: String

- default value: SHAPE

cache

- description: To persist the outgoing DataFrame or not.

- type: Boolean

- default value: false

input

- description: The name of the input.

- type: String

- default value: _default

output

- description: The name of the output.

- type: String

- default value: _default

ProcessorAddShapeFromXY v2

Hocon

{

input = _default

output = _default

type = com.esri.processor.ProcessorAddShapeFromXY

xField = x

yField = y

wkid = 4326

keep = false

shapeField = SHAPE

cache = false

}

# Python

# v2.2

spark.bdt.processorAddShapeFromXY(

df,

"x",

"y",

wkid = 4326,

keep = False,

shapeField = "SHAPE",

cache = False)

# v2.3

spark.bdt.addShapeFromXY(

df,

"x",

"y",

wkid = 4326,

keep = False,

shapeField = "SHAPE",

cache = False)

com.esri.processor.ProcessorAddShapeFromXY

Add SHAPE column based on the configured X field and Y field.

Consider using ST_FromXY

Configuration

Required

xField

- description: The name of the longitude field.

- type: String

yField

- description: The name of the latitude field.

- type: String

Optional

wkid

- description: The spatial reference id.

- type: Integer

- default value: 4326

keep

- description: Whether to retain the original xField and yField or not.

- type: Boolean

- default value: false

shapeField

- description: The name of the SHAPE struct field.

- type: String

- default value: SHAPE

cache

- description: To persist the outgoing DataFrame or not.

- type: Boolean

- default value: false

input

- description: The name of the input.

- type: String

- default value: _default

output

- description: The name of the output.

- type: String

- default value: _default

ProcessorAddWKT v2

Hocon

{

input = _default

output = _default

type = com.esri.processor.ProcessorAddWKT

wktField = WKT

shapeField = SHAPE

keep = false

cache = false

}

# Python

# v2.2

spark.bdt.processorAddWKT(

df,

wktField = "WKT",

keep = False,

shapeField = "SHAPE",

cache = False)

# v2.3

spark.bdt.addWKT(

df,

wktField = "WKT",

keep = False,

shapeField = "SHAPE",

cache = False)

com.esri.processor.ProcessorAddWKT

Add a new field containing the well-known text representation.

Consider using ST_AsText

Configuration

Optional

wktField

- description: The name of the field holding well-known text.

- type: String

- default value: wkt

keep

- description: Whether to keep the SHAPE struct field or not.

- type: Boolean

- default value: false

shapeField

- description: The name of the SHAPE struct field.

- type: String

- default value: SHAPE

cache

- description: Persist the outgoing DataFrame.

- type: Boolean

- default value: false

input

- description: The name of the input.

- type: String

- default value: _default

output

- description: The name of the output.

- type: String

- default value: _default

ProcessorArea v2.1

Hocon

{

input = _default

output = _default

type = com.esri.processor.ProcessorArea

areaField = shape_area

shapeField = SHAPE

cache = false

}

# Python

# v2.2

spark.bdt.processorArea(

df,

areaField = "shape_area",

shapeField = "SHAPE",

cache = False)

# v2.3

spark.bdt.area(

df,

areaField = "shape_area",

shapeField = "SHAPE",

cache = False)

com.esri.processor.ProcessorArea

Calculate the area of a geometry.
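The text does not say how the area is computed. As a sketch of one common approach for planar coordinates, the shoelace formula below computes the area of a simple polygon ring in the square units of its coordinates. The function name `ring_area` is hypothetical, and BDT's actual computation may differ, for example for geographic coordinates.

```python
def ring_area(ring):
    """Planar area of a simple polygon given as a list of (x, y) vertices."""
    total = 0.0
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]  # wrap around to close the ring
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


square = [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0)]
print(ring_area(square))
```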

Consider using ST_Area

Configuration

Optional

areaField

- description: The name of the area column in the DataFrame

- type: String

- default value: shape_area

shapeField

- description: The name of the SHAPE Struct for DataFrame.

- type: String

- default value: SHAPE

cache

- description: To persist the outgoing DataFrame or not.

- type: Boolean

- default value: false

input

- description: The name of the input DataFrame.

- type: String

- default value: _default

output

- description: The name of the output DataFrame.

- type: String

- default value: _default

ProcessorAssembler v2.2

Hocon

{

input = _default

output = _default

type = com.esri.processor.ProcessorAssembler

targetIDFields = [ID1, ID2, ID3]

timeField = current_time

timeFormat = timestamp

separator = ":"

trackIDName = trackID

origMillisName = origMillis

destMillisName = destMillis

durationMillisName = durationMillis

numTargetsName = numTargets

shapeField = SHAPE

cache = false

}

# Python

# v2.2

spark.bdt.processorAssembler(

df,

["ID1", "ID2", "ID3"],

"current_time",

"timestamp",

separator = ":",

trackIDName = "trackID",

origMillisName = "origMillis",

destMillisName = "destMillis",

durationMillisName = "durationMillis",

numTargetsName = "numTargets",

shapeField = "SHAPE",

cache = False)

# v2.3

spark.bdt.assembler(

df,

["ID1", "ID2", "ID3"],

"current_time",

"timestamp",

separator = ":",

trackIDName = "trackID",

origMillisName = "origMillis",

destMillisName = "destMillis",

durationMillisName = "durationMillis",

numTargetsName = "numTargets",

shapeField = "SHAPE",

cache = False)

com.esri.processor.ProcessorAssembler

Processor to assemble a set of targets (points) into a track (polyline). The user can specify a set of target fields that make up a track identifier and a timestamp field in the target attributes, such that all the targets with that same track identifier will be assembled chronologically as polyline vertices. The polyline consists of 3D points where x/y are the target location and z is the timestamp.

The output DataFrame will have the following columns:

- shape: The shape struct that defines the track (polyline).

- trackID: The track identifier. Typically, this is a concatenation of the string representation of the user-specified target fields separated by a colon (:).

- origMillis: The track starting epoch in milliseconds.

- destMillis: The track ending epoch in milliseconds.

- durationMillis: The track duration in milliseconds.

- numTargets: The number of targets in the track.
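The assembly logic described above can be sketched in plain Python, without Spark or BDT. The function name `assemble_tracks` and the sample records are made up, and the sketch assumes the time field already holds epoch milliseconds (the "long" time format).

```python
from collections import defaultdict


def assemble_tracks(targets, target_id_fields, time_field, separator=":"):
    """Group targets by track ID and order each group chronologically.

    Each target is a dict with x, y, the ID fields, and a numeric time value.
    """
    groups = defaultdict(list)
    for target in targets:
        track_id = separator.join(str(target[f]) for f in target_id_fields)
        groups[track_id].append(target)

    tracks = []
    for track_id, members in groups.items():
        members.sort(key=lambda t: t[time_field])
        orig, dest = members[0][time_field], members[-1][time_field]
        tracks.append({
            # Polyline vertices: x/y are the location, z is the timestamp.
            "shape": [(t["x"], t["y"], t[time_field]) for t in members],
            "trackID": track_id,
            "origMillis": orig,
            "destMillis": dest,
            "durationMillis": dest - orig,
            "numTargets": len(members),
        })
    return tracks


targets = [
    {"ID1": "a", "ID2": 1, "x": 1.0, "y": 1.0, "t": 2000},
    {"ID1": "a", "ID2": 1, "x": 0.0, "y": 0.0, "t": 1000},
    {"ID1": "b", "ID2": 2, "x": 5.0, "y": 5.0, "t": 1500},
]
tracks = assemble_tracks(targets, ["ID1", "ID2"], "t")
for track in tracks:
    print(track["trackID"], track["numTargets"], track["durationMillis"])
```

The two targets that share ID1 = a and ID2 = 1 end up in one track with the ID "a:1", ordered by time regardless of their input order.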

Configuration

Required

targetIDFields

- description: The combination of target attribute fields to use as a track identifier.

- type: Array[String]

timeField

- description: The time field.

- type: String

timeFormat

- description: The format of the time input. Must be one of: long, date, timestamp.

- type: String

Optional

separator

- description: The separator used to join the target ID fields. The joined target ID fields become the trackID.

- type: String

- default value: ":"

trackIDName

- description: The name of the output field to hold the track identifier as a polyline attribute.

- type: String

- default value: trackID

origMillisName

- description: The name of the output field to hold the track starting epoch in milliseconds as a polyline attribute.

- type: String

- default value: origMillis

destMillisName

- description: The name of the output field to hold the track ending epoch in milliseconds as a polyline attribute.

- type: String

- default value: destMillis

durationMillisName

- description: The name of the output field to hold the track duration in milliseconds as a polyline attribute.

- type: String

- default value: durationMillis

numTargetsName

- description: The name of the output field to hold the number of targets in the track as a polyline attribute.

- type: String

- default value: numTargets

shapeField

- description: The name of the SHAPE Struct field.

- type: String

- default value: SHAPE

cache

- description: To persist the outgoing DataFrame or not.

- type: Boolean

- default value: false

input

- description: The input feature class name.

- type: String

- default value: _default

output

- description: The output feature class name.

- type: String

- default value: _default

ProcessorBuffer v2

Hocon

{

input = _default

output = _default

type = com.esri.processor.ProcessorBuffer

distance = 2.0

shapeField = SHAPE

cache = false

}

# Python

# v2.2

spark.bdt.processorBuffer(

df,

2.0,

shapeField = "SHAPE",

cache = False)

# v2.3

spark.bdt.buffer(

df,

2.0,

shapeField = "SHAPE",

cache = False)

com.esri.processor.ProcessorBuffer

Buffer geometries by a radius.

Consider using ST_Buffer

Configuration

Required

- distance

- description: The buffer distance.

- type: Double

Optional

shapeField

- description: The name of the SHAPE struct field.

- type: String

- default value: SHAPE

cache

- description: Persist the outgoing DataFrame.

- type: Boolean

- default value: false

input

- description: The name of the input.

- type: String

- default value: _default

output

- description: The name of the output.

- type: String

- default value: _default

ProcessorCentroid v2

Hocon

{

input = _default

output = _default

type = com.esri.processor.ProcessorCentroid

shapeField = SHAPE

cache = false

}

# Python

# v2.2

spark.bdt.processorCentroid(

df,

shapeField = "SHAPE",

cache = False)

# v2.3

spark.bdt.centroid(

df,

shapeField = "SHAPE",

cache = False)

com.esri.processor.ProcessorCentroid

Produces centroids of features.

Consider using ST_Centroid

Configuration

Optional

shapeField

- description: The name of the SHAPE Struct field.

- type: String

- default value: SHAPE

cache

- description: Persist the outgoing DataFrame or not.

- type: Boolean

- default value: false

input

- description: The name of the input DataFrame.

- type: String

- default value: _default

output

- description: The name of the output DataFrame.

- type: String

- default value: _default

ProcessorCountWithin v2.2

Hocon

{

inputs = [_default, _default]

output = _default

type = com.esri.processor.ProcessorCountWithin

cellSize = 1

keepGeometry = false

emitContainsOnly = false

doBroadcast = false

lhsShapeField = SHAPE

rhsShapeField = SHAPE

cache = false

extent = [-180.0, -90.0, 180.0, 90.0]

depth = 16

}

# Python

# v2.2

spark.bdt.processorCountWithin(

df1,

df2,

1.0,

keepGeometry = False,

emitContainsOnly = False,

doBroadcast = False,

lhsShapeField = "SHAPE",

rhsShapeField = "SHAPE",

cache = False,

extentList = [-180.0, -90.0, 180.0, 90.0],

depth = 16)

# v2.3

spark.bdt.countWithin(

df1,

df2,

1.0,

keepGeometry = False,

emitContainsOnly = False,

doBroadcast = False,

lhsShapeField = "SHAPE",

rhsShapeField = "SHAPE",

cache = False,

extentList = [-180.0, -90.0, 180.0, 90.0],

depth = 16)

com.esri.processor.ProcessorCountWithin

Processor to produce a count of all lhs features that are contained in a rhs feature.

If emitContainsOnly is set to true (the default), this processor will only output rhs features that contain at least one lhs feature. To get all rhs features in the output, emitContainsOnly must be set to false. This will include rhs features that contain 0 lhs features. IMPORTANT: There will be a non-trivial time cost if emitContainsOnly is set to false.

If keepGeometry is set to false, the output format will be [rhs attributes + lhs count]. This is the default. If keepGeometry is set to true, the output format will be [rhs shape + rhs attributes + lhs count].

For example, assume that:

- Polygon with attributes [NAME: A, ID: 1] contains 2 points.

- Polygon with attributes [NAME: A, ID: 2] contains 1 point.

With keepGeometry set to false, the output rows will be:

- [NAME: A, ID: 1, 2]

- [NAME: A, ID: 2, 1]
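The counting behavior and the effect of emitContainsOnly can be sketched in plain Python. This is not the BDT implementation: the function name `count_within` and the output key `count` are made up, rectangles stand in for polygons, and there is no spatial partitioning.

```python
def count_within(points, polygons, emit_contains_only):
    """Count the points that fall inside each rectangle.

    points:   list of (x, y) tuples (the lhs features)
    polygons: list of (attributes, (xmin, ymin, xmax, ymax)) (the rhs features)
    """
    rows = []
    for attributes, (xmin, ymin, xmax, ymax) in polygons:
        count = sum(1 for x, y in points
                    if xmin <= x <= xmax and ymin <= y <= ymax)
        if count == 0 and emit_contains_only:
            continue  # drop rhs features that contain no lhs feature
        # Equivalent of keepGeometry = false: rhs attributes plus the count.
        rows.append({**attributes, "count": count})
    return rows


points = [(1, 1), (2, 2), (11, 1)]
polygons = [
    ({"NAME": "A", "ID": 1}, (0, 0, 5, 5)),
    ({"NAME": "A", "ID": 2}, (10, 0, 15, 5)),
    ({"NAME": "B", "ID": 3}, (20, 0, 25, 5)),
]
only_containing = count_within(points, polygons, emit_contains_only=True)
all_polygons = count_within(points, polygons, emit_contains_only=False)
print(only_containing)
print(all_polygons)
```

With emit_contains_only set to True, the third rectangle is absent from the result; with False it appears with a count of 0.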

If doBroadcast is true and there are null values in the shape column of either input DataFrame, a NullPointerException will be thrown. Handle these null values before using this processor. If doBroadcast is false, rows with null values in the shape column are handled implicitly and dropped.

Cell size must not be smaller than the following values:

- For Spatial Reference WGS 84 GPS: 0.000000167638064 degrees

- For Spatial Reference WGS 1984 Web Mercator: 0.0186264515 meters
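The minimums listed above can be checked before a job is submitted. The sketch below is a hypothetical pre-flight check, not a BDT function. Mapping the two spatial references to wkid 4326 and 3857 is an assumption made for the example.

```python
# Minimum cell sizes from the text above, keyed by an assumed wkid.
MIN_CELL_SIZE = {
    4326: 0.000000167638064,  # WGS 84, degrees
    3857: 0.0186264515,       # WGS 1984 Web Mercator, meters
}


def check_cell_size(cell_size, wkid):
    """Return cell_size unchanged, or raise if it is below the minimum."""
    minimum = MIN_CELL_SIZE.get(wkid)
    if minimum is None:
        raise ValueError(f"no documented minimum for wkid {wkid}")
    if cell_size < minimum:
        raise ValueError(
            f"cellSize {cell_size} is smaller than the minimum {minimum}")
    return cell_size


print(check_cell_size(0.1, 4326))
```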

Configuration

Required

- cellSize

- description: The spatial partitioning cell size.

- type: Double

Optional

keepGeometry

- description: To keep the rhs ShapeStruct in the output or not.

- type: Boolean

- default value: false

emitContainsOnly

- description: To only emit rhs geometries that contain lhs geometries.

- type: Boolean

- default value: false

doBroadcast

- description: If set to true, rhs DataFrame is broadcasted. Set spark.sql.autoBroadcastJoinThreshold accordingly.

- type: Boolean

- default value: false

lhsShapeField

- description: The name of the SHAPE Struct field in the LHS DataFrame.

- type: String

- default value: SHAPE

rhsShapeField

- description: The name of the SHAPE Struct field in the RHS DataFrame.

- type: String

- default value: SHAPE

cache

- description: To persist the outgoing DataFrame or not.

- type: Boolean

- default value: false

extent

- description: The spatial index extent. Array of the extent coordinates [xmin, ymin, xmax, ymax].

- type: Array[Double]

- default value: [-180.0, -90.0, 180.0, 90.0]

depth

- description: The spatial index depth.

- type: Integer

- default value: 16

inputs

- description: The names of the input DataFrames.

- type: Array[String]

- default value: _default

output

- description: The name of the output.

- type: String

- default value: _default

ProcessorDifference v2.2

Hocon

{

inputs = [blockgroups, zipcodes]

output = _default

type = com.esri.processor.ProcessorDifference

cellSize = 0.1

doBroadcast = false

lhsShapeField = SHAPE

rhsShapeField = SHAPE

cache = false

extent = [-180.0, -90.0, 180.0, 90.0]

depth = 16

}

# Python

# v2.2

spark.bdt.processorDifference(

df1,

df2,

0.1,

doBroadcast = False,

lhsShapeField = "SHAPE",

rhsShapeField = "SHAPE",

cache = False,

extentList = [-180.0, -90.0, 180.0, 90.0],

depth = 16)

# v2.3

spark.bdt.difference(

df1,

df2,

0.1,

doBroadcast = False,

lhsShapeField = "SHAPE",

rhsShapeField = "SHAPE",

cache = False,

extentList = [-180.0, -90.0, 180.0, 90.0],

depth = 16)

com.esri.processor.combine.ProcessorDifference

For each left hand side (lhs) feature, find all relevant right hand side (rhs) features and take the set-theoretic difference of the lhs geometry and each rhs geometry. Both the lhs and rhs features can be of type point, polyline, or polygon.

When n relevant rhs features are found, the lhs feature is emitted n times, each time with the lhs geometry replaced by the difference of the lhs geometry and one rhs geometry. When a lhs feature has no overlapping rhs features, nothing is emitted, so the output may contain fewer rows than there are input lhs features.
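The emission rule can be sketched with Python sets, where a set of grid cells stands in for a geometry. This is only an illustration of the row semantics: the function name `difference` and the sample features are made up, and the sketch assumes the result is the lhs geometry minus the rhs geometry.

```python
def difference(lhs_features, rhs_features):
    """Emit one row per overlapping (lhs, rhs) pair, holding lhs minus rhs.

    Each feature is (attributes, set of grid cells).
    """
    rows = []
    for lhs_attrs, lhs_cells in lhs_features:
        for rhs_attrs, rhs_cells in rhs_features:
            if lhs_cells & rhs_cells:  # the rhs feature overlaps the lhs
                rows.append((lhs_attrs, lhs_cells - rhs_cells, rhs_attrs))
    return rows


lhs = [
    ({"id": "L1"}, {(0, 0), (1, 0), (2, 0)}),
    ({"id": "L2"}, {(9, 9)}),  # overlaps nothing, so it is never emitted
]
rhs = [
    ({"name": "R1"}, {(0, 0)}),
    ({"name": "R2"}, {(2, 0), (3, 0)}),
]
rows = difference(lhs, rhs)
for row in rows:
    print(row)
```

L1 overlaps two rhs features and is emitted twice, while L2 does not appear in the output at all.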

The final schema is: lhs attributes + [[SHAPE_FIELD]] + rhs attributes.

Cell size must not be smaller than the following values:

- For Spatial Reference WGS 84 GPS: 0.000000167638064 degrees

- For Spatial Reference WGS 1984 Web Mercator: 0.0186264515 meters

Configuration

Required

- cellSize

- description: The spatial partitioning grid size.

- type: Double

Optional

doBroadcast

- description: If set to true, rhs DataFrame is broadcasted. Set spark.sql.autoBroadcastJoinThreshold accordingly.

- type: Boolean

- default value: false

lhsShapeField

- description: The name of the SHAPE Struct field in the LHS DataFrame.

- type: String

- default value: SHAPE

rhsShapeField

- description: The name of the SHAPE Struct field in the RHS DataFrame.

- type: String

- default value: SHAPE

cache

- description: To persist the outgoing DataFrame or not.

- type: Boolean

- default value: false

extent

- description: The spatial index extent. Array of the extent coordinates [xmin, ymin, xmax, ymax].

- type: Array[Double]

- default value: [-180.0, -90.0, 180.0, 90.0]

depth

- description: The spatial index depth.

- type: Integer

- default value: 16

inputs

- description: The names of the input DataFrames.

- type: Array[String]

- default value: []

output

- description: The name of the output.

- type: String

- default value: _default

ProcessorDissolve v2

Hocon

{

type = com.esri.processor.ProcessorDissolve

fields = [TYPE]

singlePart = false

shapeField = SHAPE

cache = false

}

# Python

# v2.2

spark.bdt.processorDissolve(

df,

fields = ["TYPE"],

singlePart = False,

shapeField = "SHAPE",

cache = False)

# v2.3

spark.bdt.dissolve(

df,

fields = ["TYPE"],

singlePart = False,

shapeField = "SHAPE",

cache = False)

com.esri.processor.ProcessorDissolve

Dissolve features either by attributes and spatially, or spatially only.

Performs the topological union operation on two geometries.

The supported geometry types are multipoint, polyline and polygon.

singlePart indicates whether the returned geometry is multipart or not.

The output schema is: fields + SHAPE Struct.
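As a sketch of the attribute side of a dissolve, the plain-Python example below groups features by the configured fields and unions their geometries, with a set of grid cells standing in for each geometry. The function name `dissolve` is hypothetical. Merging every feature into one group when no fields are given is an assumption of this sketch, and the singlePart option is not modeled.

```python
from collections import defaultdict


def dissolve(features, fields):
    """Union the geometries of features that share the same field values.

    Each feature is (attributes, set of grid cells).
    Returns rows shaped like the output schema: fields + geometry.
    """
    groups = defaultdict(set)
    for attributes, cells in features:
        key = tuple(attributes[f] for f in fields)  # () when fields is empty
        groups[key] |= cells
    return [(dict(zip(fields, key)), cells) for key, cells in groups.items()]


features = [
    ({"TYPE": "a"}, {(0, 0)}),
    ({"TYPE": "a"}, {(1, 0)}),
    ({"TYPE": "b"}, {(5, 5)}),
]
by_type = dissolve(features, ["TYPE"])
everything = dissolve(features, [])
print(by_type)
print(everything)
```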

Configuration

Optional

fields

- description: The collection of field names to dissolve by.

- type: Array[String]

- default value: []

singlePart

- description: Indicates whether the returned geometry is Multipart or not.

- type: Boolean

- default value: true

shapeField

- description: The name of the SHAPE struct field.

- type: String

- default value: SHAPE

cache

- description: To persist the outgoing DataFrame or not.

- type: Boolean

- default value: true

input

- description: The name of the input.

- type: String

- default value: _default

output

- description: The name of the output.

- type: String

- default value: _default

ProcessorDissolve2 v2

Hocon

{

type = com.esri.processor.ProcessorDissolve2

fields = [TYPE]

singlePart = true

sort = false

shapeField = SHAPE

cache = false

}

# Python

# v2.2

spark.bdt.processorDissolve2(

df,

fields = ["TYPE"],

singlePart = True,

sort = False,

shapeField = "SHAPE",

cache = False)

# v2.3

spark.bdt.dissolve2(

df,

fields = ["TYPE"],

singlePart = True,

sort = False,

shapeField = "SHAPE",

cache = False)

com.esri.processor.ProcessorDissolve2

This implementation is the same as ProcessorDissolve in BDT 1.0.

Dissolve features either by attributes and spatially, or spatially only.

Performs the topological union operation on two geometries.

The supported geometry types are multipoint, polyline and polygon.

singlePart indicates whether the returned geometry is multipart or not.

The output schema is: fields + SHAPE Struct.

Configuration

Optional

fields

- description: The collection of field names to dissolve by.

- type: Array[String]

- default value: []

singlePart

- description: Indicates whether the returned geometry is Multipart or not.

- type: Boolean

- default value: true

sort

- description: Sort by the fields or not

- type: Boolean

- default value: false

shapeField

- description: The name of the SHAPE struct field.

- type: String

- default value: SHAPE

cache

- description: To persist the outgoing DataFrame or not.

- type: Boolean

- default value: false

input

- description: The name of the input.

- type: String

- default value: _default

output

- description: The name of the output.

- type: String

- default value: _default

ProcessorDistance v2.1

Hocon

{

inputs = [blockgroups, zipcodes]

output = _default

type = com.esri.processor.ProcessorDistance

cellSize = 0.1

radius = 0.003

emitEnrichedOnly = false

doBroadcast = false

distanceField = distance

lhsShapeField = SHAPE

rhsShapeField = SHAPE

cache = false

extent = [-180.0, -90.0, 180.0, 90.0]

}

# Python

# v2.2

spark.bdt.processorDistance(

df1,

df2,

0.1,

0.003,

emitEnrichedOnly = True,

doBroadcast = False,

distanceField = "distance",

lhsShapeField = "SHAPE",

rhsShapeField = "SHAPE",

cache = False,

extentList = [-180.0, -90.0, 180.0, 90.0],

depth = 16)

# v2.3

spark.bdt.distance(

df1,

df2,

0.1,

0.003,

emitEnrichedOnly = True,

doBroadcast = False,

distanceField = "distance",

lhsShapeField = "SHAPE",

rhsShapeField = "SHAPE",

cache = False,

extentList = [-180.0, -90.0, 180.0, 90.0],

depth = 16)

com.esri.processor.ProcessorDistance

Find a target feature within the search distance for a source feature.

For each left hand side (lhs) feature, find the closest right hand side (rhs) feature within the specified radius. Both the lhs and rhs features can be of type point, polyline, or polygon.

When the lhs and rhs feature types are point, the distance is in meters. When a lhs feature has no rhs features within the specified radius, that lhs feature is emitted with null values for rhs attributes.

The final schema is: lhs attributes + [[SHAPE_FIELD]] + rhs attributes + distance.
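The nearest-feature rule and the emitEnrichedOnly switch can be sketched in plain Python. This is a brute-force illustration, not the BDT implementation: the function name `nearest_within` and the sample points are made up, it uses planar Euclidean distance rather than meters, and it ignores cellSize, extent, and depth.

```python
import math


def nearest_within(lhs_points, rhs_points, radius, emit_enriched_only):
    """For each lhs point, find the closest rhs point within radius.

    Both inputs are lists of (attributes, (x, y)).
    Returns rows of (lhs attributes, rhs attributes or None, distance or None).
    """
    rows = []
    for lhs_attrs, (lx, ly) in lhs_points:
        best = None
        for rhs_attrs, (rx, ry) in rhs_points:
            d = math.hypot(lx - rx, ly - ry)
            if d <= radius and (best is None or d < best[1]):
                best = (rhs_attrs, d)
        if best is not None:
            rows.append((lhs_attrs, best[0], best[1]))
        elif not emit_enriched_only:
            # No rhs feature in range: emit nulls for the rhs side.
            rows.append((lhs_attrs, None, None))
    return rows


lhs = [({"id": "p1"}, (0.0, 0.0)), ({"id": "p2"}, (10.0, 10.0))]
rhs = [({"name": "a"}, (0.0, 3.0)), ({"name": "b"}, (4.0, 0.0))]
all_rows = nearest_within(lhs, rhs, 5.0, emit_enriched_only=False)
enriched_rows = nearest_within(lhs, rhs, 5.0, emit_enriched_only=True)
print(all_rows)
print(enriched_rows)
```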

Extent must be specified when using a projected spatial reference. The default extent assumes world geographic (WGS84/4326).

If doBroadcast is true and there are null values in the shape column of either input DataFrame, a NullPointerException will be thrown. Handle these null values before using this processor. If doBroadcast is false, rows with null values in the shape column are handled implicitly and dropped.

Cell size must not be smaller than the following values:

- For Spatial Reference WGS 84 GPS: 0.000000167638064 degrees

- For Spatial Reference WGS 1984 Web Mercator: 0.0186264515 meters

Consider using ST_Distance

Configuration

Required

cellSize

- description: The spatial partitioning cell size. Note: a value is required, but cellSize is ignored when doBroadcast is true.

- type: Double

radius

- description: The search radius in units of the spatial reference system.

- type: Double

Optional

emitEnrichedOnly (v2.1)

- description: When true, only LHS records that have a nearest RHS record are emitted. LHS records whose RHS attributes would be null are not emitted.

- type: Boolean

- default value: true (note: hocon default is false)

doBroadcast

- description: If set to true, rhs DataFrame is broadcasted. Set spark.sql.autoBroadcastJoinThreshold accordingly.

- type: Boolean

- default value: false

distanceField

- description: The name of the distance field to be added.

- type: String

- default value: distance

lhsShapeField

- description: The name of the SHAPE Struct field in the LHS DataFrame.

- type: String

- default value: SHAPE

rhsShapeField

- description: The name of the SHAPE Struct field in the RHS DataFrame.

- type: String

- default value: SHAPE

cache

- description: To persist the outgoing DataFrame or not.

- type: Boolean

- default value: false

extent

- description: The spatial index extent. Array of the extent coordinates [xmin, ymin, xmax, ymax].

- type: Array[Double]

- default value: [-180.0, -90.0, 180.0, 90.0]

depth

- description: The spatial index depth.

- type: Integer

- default value: 16

inputs

- description: The names of the input DataFrames.

- type: Array[String]

- default value: []

output

- description: The name of the output.

- type: String

- default value: _default

ProcessorDistanceAllCandidates v2.1

Hocon

{

inputs = [_default, _default]

output = _default

type = com.esri.processor.ProcessorDistanceAllCandidates

cellSize = 1

radius = 1

emitEnrichedOnly = true

doBroadcast = false

distanceField = distance

lhsShapeField = SHAPE

rhsShapeField = SHAPE

cache = false

extent = [-180.0, -90.0, 180.0, 90.0]

depth = 16

}

# Python

# v2.2

spark.bdt.processorDistanceAllCandidates(

df1,

df2,

1.0,

1.0,

emitEnrichedOnly = True,

doBroadcast = False,

distanceField = "distance",

lhsShapeField = "SHAPE",

rhsShapeField = "SHAPE",

cache = False,

extentList = [-180.0, -90.0, 180.0, 90.0],

depth = 16)

# v2.3

spark.bdt.distanceAllCandidates(

df1,

df2,

1.0,

1.0,

emitEnrichedOnly = True,

doBroadcast = False,

distanceField = "distance",

lhsShapeField = "SHAPE",

rhsShapeField = "SHAPE",

cache = False,

extentList = [-180.0, -90.0, 180.0, 90.0],

depth = 16)

com.esri.processor.ProcessorDistanceAllCandidates

Processor to find all features from the right hand side dataset within a given search distance.

Enriches lhs features with the attributes of rhs features found within the specified distance.

If emitEnrichedOnly is set to false and no rhs features are found, the lhs feature is enriched with null values.

If emitEnrichedOnly is set to true and no rhs features are found, the lhs feature is not emitted in the output.

The distance between the lhs and corresponding rhs geometry is appended to the end of each row. When the input sources are points, the distance is calculated in meters.

When multiple right hand side features (A,B,C) are found within the specified distance for a left hand side feature (X), the output would be:

X,A

X,B

X,C
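
The row fan-out and the effect of emitEnrichedOnly can be illustrated without a cluster. The following is a minimal pure-Python sketch, not BDT's implementation: it assumes planar point coordinates, a plain Euclidean distance, and an inclusive radius check. The function name distance_all_candidates, the null distance on unmatched rows, and the sample points are hypothetical.

```python
import math


def distance_all_candidates(lhs, rhs, radius, emit_enriched_only=True):
    """Pair each lhs point with every rhs point within `radius`.

    lhs and rhs are lists of (name, (x, y)) tuples in the same planar units.
    Returns rows of (lhs_name, rhs_name, distance).
    """
    rows = []
    for lhs_name, (lx, ly) in lhs:
        matches = []
        for rhs_name, (rx, ry) in rhs:
            d = math.hypot(rx - lx, ry - ly)
            if d <= radius:  # assumption: the radius boundary is inclusive
                matches.append((lhs_name, rhs_name, d))
        if matches:
            # One output row per candidate, so X fans out to X,A / X,B / X,C.
            rows.extend(matches)
        elif not emit_enriched_only:
            # Assumption: unmatched lhs rows carry nulls for rhs values and distance.
            rows.append((lhs_name, None, None))
    return rows


lhs = [("X", (0.0, 0.0)), ("Y", (10.0, 10.0))]
rhs = [("A", (0.1, 0.0)), ("B", (0.0, 0.2)), ("C", (0.3, 0.0)), ("D", (5.0, 5.0))]

for row in distance_all_candidates(lhs, rhs, radius=1.0):
    print(row)
```

With a radius of 1.0, X is paired with A, B, and C, and Y produces no row. Passing emit_enriched_only=False adds a single row for Y with None in place of the rhs values.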

If doBroadcast is true and there are null values in the shape column of either input DataFrame, a NullPointerException will be thrown. Handle these null values before using this processor. If doBroadcast is false, rows with null values in the shape column are dropped implicitly.
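
One way to handle null shapes before a broadcast run is to filter them out of both inputs first. The helper below is a sketch under assumptions: drop_null_shapes is a hypothetical name, and it relies only on the standard PySpark DataFrame.where method, which accepts a SQL expression string. The commented call reuses the v2.3 signature shown above and assumes the omitted keyword arguments fall back to their defaults.

```python
def drop_null_shapes(df, shape_field="SHAPE"):
    """Return `df` without rows whose shape column is null.

    `df` is expected to be a pyspark.sql.DataFrame. DataFrame.where accepts
    a SQL expression string, so this helper needs no pyspark import.
    """
    # Backticks quote the column name in case it contains special characters.
    return df.where(f"`{shape_field}` IS NOT NULL")


# Hypothetical usage before a broadcast run (df1, df2 as in the example above):
# lhs_clean = drop_null_shapes(df1)
# rhs_clean = drop_null_shapes(df2)
# result = spark.bdt.distanceAllCandidates(
#     lhs_clean, rhs_clean, 1.0, 1.0, doBroadcast=True)
```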

Cell size must not be smaller than the following values:

For Spatial Reference WGS 84 GPS: 0.000000167638064 degrees

For Spatial Reference WGS 1984 Web Mercator: 0.0186264515 meters
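
A cell size can be checked against these minimums before a job is submitted. This is a small illustrative guard, not part of BDT: check_cell_size and the dictionary keys "WGS84" and "WEB_MERCATOR" are hypothetical labels, and only the two numeric limits come from the text above.

```python
# Minimum cell sizes quoted in the documentation above.
MIN_CELL_SIZE = {
    "WGS84": 0.000000167638064,    # degrees (WGS 84 GPS)
    "WEB_MERCATOR": 0.0186264515,  # meters (WGS 1984 Web Mercator)
}


def check_cell_size(cell_size, spatial_reference="WGS84"):
    """Return cell_size unchanged, or raise ValueError if it is below the minimum."""
    minimum = MIN_CELL_SIZE[spatial_reference]
    if cell_size < minimum:
        raise ValueError(
            f"cellSize {cell_size} is smaller than the minimum "
            f"{minimum} for {spatial_reference}"
        )
    return cell_size


# The cellSize used in the examples above passes the check.
print(check_cell_size(1.0))
```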

Configuration

Required

cellSize

- description: The spatial partitioning cell size. note: a value is required but cellSize is ignored when doBroadcast = True

- type: Double

radius

- description: The search radius in units of the spatial reference system.

- type: Double

Optional

emitEnrichedOnly

- description: When true, only LHS records that have at least one RHS record within the search radius are emitted. LHS records whose RHS attributes would be null are not emitted.

- type: Boolean

- default value: true

doBroadcast

- description: If set to true, rhs DataFrame is broadcasted. Set spark.sql.autoBroadcastJoinThreshold accordingly.

- type: Boolean

- default value: false

distanceField

- description: The name of the distance field to be added.

- type: String

- default value: distance

lhsShapeField

- description: The name of the SHAPE Struct field in the LHS DataFrame.

- type: String

- default value: SHAPE

rhsShapeField

- description: The name of the SHAPE Struct field in the RHS DataFrame.

- type: String

- default value: SHAPE

cache

- description: Whether to persist the outgoing DataFrame.

- type: Boolean

- default value: false

extent

- description: The spatial index extent. Array of the extent coordinates [xmin, ymin, xmax, ymax].

- type: Array[Double]

- default value: [-180.0, -90.0, 180.0, 90.0]

depth

- description: The spatial index depth.

- type: Integer

- default value: 16

inputs

- description: The names of the input DataFrames.

- type: Array[String]

- default value: _default

output

- description: The name of the output.

- type: String

- default value: _default

ProcessorDropFields v2

Hocon

{

input = _default

output = _default

type = com.esri.processor.ProcessorDropFields

fields = ["tip_amount", "total_amount"]

}

# Python

# v2.2

spark.bdt.processorDropFields(

df,

["tip_amount", "total_amount"],

cache = False)

# v2.3

spark.bdt.dropFields(

df,

["tip_amount", "total_amount"],

cache = False)

com.esri.processor.ProcessorDropFields

Drop the configured fields.
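
For comparison, the same effect can be approximated in plain PySpark with DataFrame.drop. This is a sketch under the assumption that the processor behaves like that method. The helper name drop_fields is hypothetical. DataFrame.drop silently ignores column names that do not exist, and the source does not say whether ProcessorDropFields does the same.

```python
def drop_fields(df, fields):
    """Return `df` without the named columns.

    `df` is expected to be a pyspark.sql.DataFrame. DataFrame.drop takes
    column names as separate arguments, so the list is unpacked.
    """
    return df.drop(*fields)


# Hypothetical usage with the fields from the example above:
# trimmed = drop_fields(df, ["tip_amount", "total_amount"])
```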

Configuration

Required

fields

- description: The collection of field names to drop.

- type: Array[String]

- default value: []

Optional

cache

- description: Whether to persist the outgoing DataFrame.

- type: Boolean

- default value: false

input

- description: The name of the input.

- type: String

- default value: _default

output

- description: The name of the output.

- type: String

- default value: _default

ProcessorEliminatePolygonPartArea v2.3

Hocon

{

input = _default

output = _default

type = com.esri.processor.ProcessorEliminatePolygonPartArea

areaThreshold = 1.0

shapeField = SHAPE

cache = false

}

# Python

# v2.3

spark.bdt.eliminatePolygonPartArea(

df,

1.0,

shapeField = "SHAPE",

cache = False)