Visualize H3 Binning in ArcGIS Pro#

Table of Contents#

Part 1: Overview#

This notebook will first walk through the process of acquiring raw point data and converting the points into a feature class to visualize in ArcGIS Pro. It will then show how to read the point feature class back into a Spark DataFrame, perform binning on the points based on H3 hexagons, and visulize the final results again in ArcGIS Pro.

Note: This notebook is intended to be run in an ArcGIS Pro notebook environment. Some concepts such as H3 binning can be broadly applicable but functions that convert to and from feature classes must be run in ArcGIS Pro.

[1]:

# Setup Spark for use in ArcGIS Pro

from spark_esri import spark_start, spark_stop

spark_stop()

config = {

"spark.driver.memory":"4G",

"spark.kryoserializer.buffer.max":"2024",

"spark.jars": r"C:\<path-to-bdt-jar>\bdt-3.4.0-3.5.1-2.12.jar",

"spark.submit.pyFiles": r"C:\<path-to-bdt-zip>\bdt-3.4.0.zip"

}

spark = spark_start(config=config)

[2]:

import bdt

from bdt import functions as F

from bdt import processors as P

from pyspark.sql.functions import count, cast, col

bdt.auth("bdt.lic")

BDT has been successfully authorized!

Welcome to

___ _ ___ __ ______ __ __ _ __

/ _ ) (_) ___ _ / _ \ ___ _ / /_ ___ _ /_ __/ ___ ___ / / / /__ (_) / /_

/ _ | / / / _ `/ / // // _ `// __// _ `/ / / / _ \/ _ \ / / / '_/ / / / __/

/____/ /_/ \_, / /____/ \_,_/ \__/ \_,_/ /_/ \___/\___//_/ /_/\_\ /_/ \__/

/___/

BDT python version: v3.4.0-v3.4.0

BDT jar version: v3.4.0-v3.4.0

Part 2: Data Preparation#

The point data used in this notebook is AIS data from the Marine Cadastre. It can be downloaded from their website: https://marinecadastre.gov/ais/ This data comes from location transmissions from vessels in U.S. and international waters.

First, read the raw AIS data from CSV. Then filter the data to a smaller area. Finally, convert the LON and LAT values to the BDT Shape Struct.

[3]:

ais_sf = (

spark

.read

.option("header", True)

.csv(r"C:\\<path-to-raw-AIS-data>\\AIS_2023_09_30.csv\") # Read the raw AIS data from CSV")

.where("LON > -123.1938172 AND LON < -121.6745364 AND LAT > 37.4196147 AND LAT < 38.3495510") # Filter to SF Bay Area

.select("MMSI",

"LON",

"LAT",

F.st_makePoint("LON", "LAT").alias("SHAPE")) # Convert to BDT Shape

)

ais_sf.printSchema()

ais_sf.show()

root

|-- MMSI: string (nullable = true)

|-- LON: string (nullable = true)

|-- LAT: string (nullable = true)

|-- SHAPE: struct (nullable = true)

| |-- WKB: binary (nullable = true)

| |-- XMIN: double (nullable = true)

| |-- YMIN: double (nullable = true)

| |-- XMAX: double (nullable = true)

| |-- YMAX: double (nullable = true)

+---------+----------+--------+--------------------+

| MMSI| LON| LAT| SHAPE|

+---------+----------+--------+--------------------+

|367436230|-122.26363|37.73602|{[01 01 00 00 00 ...|

|338451372|-122.66533|37.78141|{[01 01 00 00 00 ...|

|366924720|-122.36720|37.92258|{[01 01 00 00 00 ...|

|211778580|-122.48282|37.49167|{[01 01 00 00 00 ...|

|366971280|-122.37741|37.66401|{[01 01 00 00 00 ...|

|366963980|-122.38916|37.80751|{[01 01 00 00 00 ...|

|368063440|-122.28080|37.79395|{[01 01 00 00 00 ...|

|366864850|-122.41172|37.81006|{[01 01 00 00 00 ...|

|366983830|-122.28244|37.79284|{[01 01 00 00 00 ...|

|368302170|-122.34451|37.78415|{[01 01 00 00 00 ...|

|368035480|-122.36745|37.90637|{[01 01 00 00 00 ...|

|367389840|-122.36744|37.92201|{[01 01 00 00 00 ...|

|366969740|-121.94167|38.05137|{[01 01 00 00 00 ...|

|368053730|-122.26303|38.10011|{[01 01 00 00 00 ...|

|367799820|-122.28531|37.79255|{[01 01 00 00 00 ...|

|367425520|-122.39645|37.89425|{[01 01 00 00 00 ...|

|368018070|-122.30004|37.77152|{[01 01 00 00 00 ...|

|367469070|-122.31496|37.86832|{[01 01 00 00 00 ...|

|366998510|-122.07680|38.05830|{[01 01 00 00 00 ...|

|563025600|-122.33118|37.81289|{[01 01 00 00 00 ...|

+---------+----------+--------+--------------------+

only showing top 20 rows

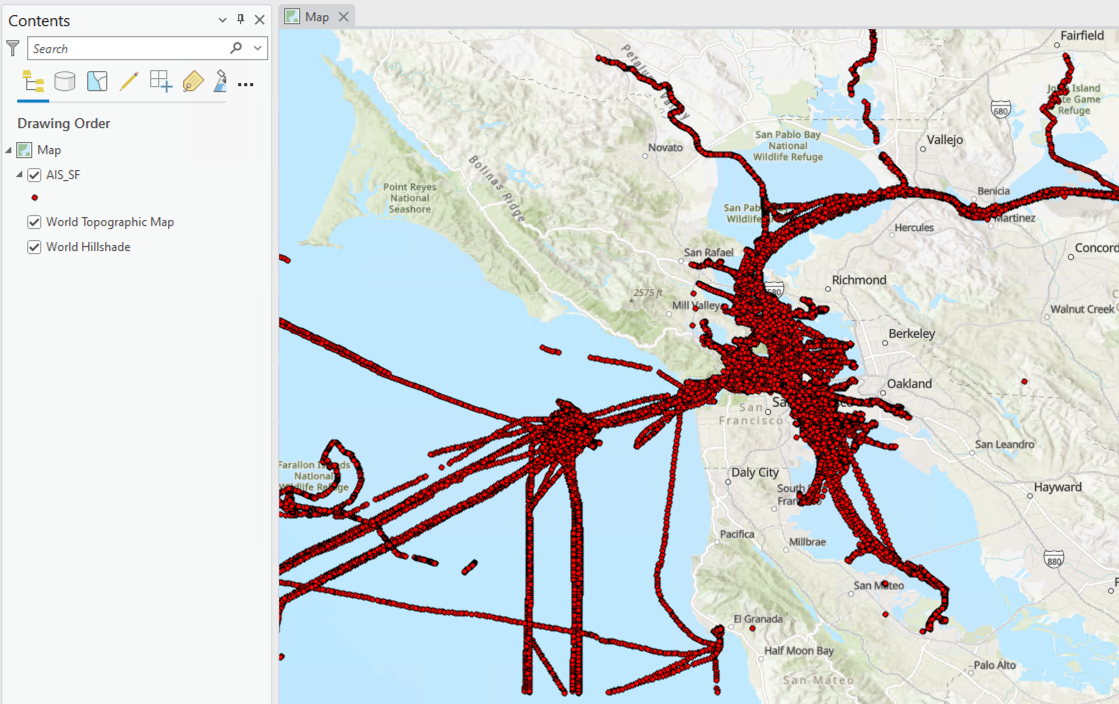

Part 3: Visualize Point Data#

Utilize the

to_feature_classBDT processor to save the filtered down AIS data to a feature class in the scratch workspace in ArcGIS Pro.Note: The

to_feature_classfunction will only work in an ArcGIS Pro notebook

[4]:

P.to_feature_class(ais_sf,

feature_class_name="AIS_SF",

wkid=4326,

workspace="scratch",

shape_field="SHAPE")

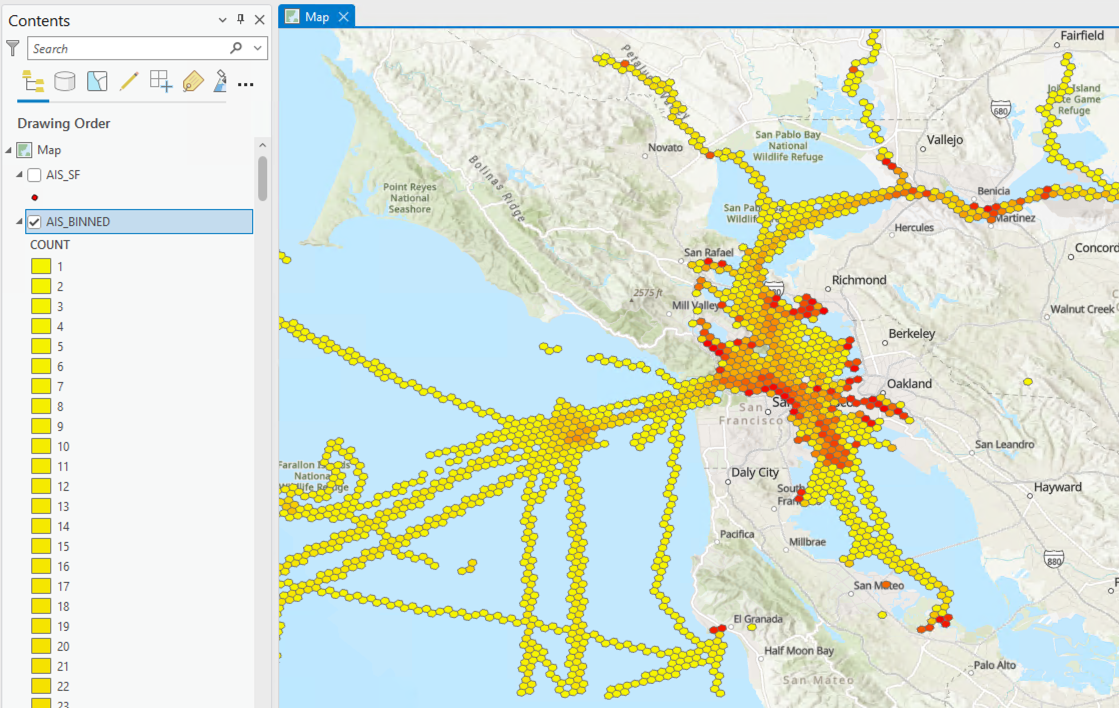

Looking at the Map in ArcGIS Pro, the point data is visualized.

Part 4: Bin Points Into H3 Hexes#

Next, utilize the

from_feature_classBDT processor to convert the feature class created in the last step back to a Spark DataFrame for H3 binning.

from_feature_classreturns the geometry column in WKB format, convert the WKB to the BDT Shape Struct withst_fromWKB.

[5]:

df = P.from_feature_class("AIS_SF")

df = df.withColumn("SHAPE", F.st_fromWKB("Shape"))

df.printSchema()

df.show()

root

|-- OBJECTID: long (nullable = true)

|-- SHAPE: struct (nullable = true)

| |-- WKB: binary (nullable = true)

| |-- XMIN: double (nullable = true)

| |-- YMIN: double (nullable = true)

| |-- XMAX: double (nullable = true)

| |-- YMAX: double (nullable = true)

|-- MMSI: string (nullable = true)

|-- LON: string (nullable = true)

|-- LAT: string (nullable = true)

+--------+--------------------+---------+----------+--------+

|OBJECTID| SHAPE| MMSI| LON| LAT|

+--------+--------------------+---------+----------+--------+

| 1|{[01 01 00 00 00 ...|367436230|-122.26363|37.73602|

| 2|{[01 01 00 00 00 ...|338451372|-122.66533|37.78141|

| 3|{[01 01 00 00 00 ...|366924720|-122.36720|37.92258|

| 4|{[01 01 00 00 00 ...|211778580|-122.48282|37.49167|

| 5|{[01 01 00 00 00 ...|366971280|-122.37741|37.66401|

| 6|{[01 01 00 00 00 ...|366963980|-122.38916|37.80751|

| 7|{[01 01 00 00 00 ...|368063440|-122.28080|37.79395|

| 8|{[01 01 00 00 00 ...|366864850|-122.41172|37.81006|

| 9|{[01 01 00 00 00 ...|366983830|-122.28244|37.79284|

| 10|{[01 01 00 00 00 ...|368302170|-122.34451|37.78415|

| 11|{[01 01 00 00 00 ...|368035480|-122.36745|37.90637|

| 12|{[01 01 00 00 00 ...|367389840|-122.36744|37.92201|

| 13|{[01 01 00 00 00 ...|366969740|-121.94167|38.05137|

| 14|{[01 01 00 00 00 ...|368053730|-122.26303|38.10011|

| 15|{[01 01 00 00 00 ...|367799820|-122.28531|37.79255|

| 16|{[01 01 00 00 00 ...|367425520|-122.39645|37.89425|

| 17|{[01 01 00 00 00 ...|368018070|-122.30004|37.77152|

| 18|{[01 01 00 00 00 ...|367469070|-122.31496|37.86832|

| 19|{[01 01 00 00 00 ...|366998510|-122.07680|38.05830|

| 20|{[01 01 00 00 00 ...|563025600|-122.33118|37.81289|

+--------+--------------------+---------+----------+--------+

only showing top 20 rows

Bin the data by H3 Hexagon:

Assign each point its H3 Index with

geoToH3.Group by the H3 Index to group together all points in the same H3 hexagon.

Use

countto aggregate the number of points in each hexagon.Construct the H3 Hexagon geometry from the H3 Index with

h3ToGeoBoundaryandst_makePolygon.

[6]:

binned = (

df

.withColumn("H3_IDX", F.geoToH3("SHAPE.YMIN", "SHAPE.XMIN", 8)) # Get the H3 Index for each point at H3 resolution 8

.groupBy("H3_IDX") # Group points in the same H3 hexagon

.agg(

count("*").alias("COUNT"), # Aggregate the number of points in each Hexagon

)

.withColumn("SHAPE", F.st_makePolygon(F.h3ToGeoBoundary("H3_IDX"))) # Convert from H3 Index to hexagon geometry

)

binned.printSchema()

binned.show()

root

|-- H3_IDX: long (nullable = true)

|-- COUNT: long (nullable = false)

|-- SHAPE: struct (nullable = true)

| |-- WKB: binary (nullable = true)

| |-- XMIN: double (nullable = true)

| |-- YMIN: double (nullable = true)

| |-- XMAX: double (nullable = true)

| |-- YMAX: double (nullable = true)

+------------------+-----+--------------------+

| H3_IDX|COUNT| SHAPE|

+------------------+-----+--------------------+

|613196571579777023| 63|{[01 06 00 00 00 ...|

|613196571586068479| 99|{[01 06 00 00 00 ...|

|613196885806546943| 2|{[01 06 00 00 00 ...|

|613196896420233215| 12|{[01 06 00 00 00 ...|

|613196569748963327| 118|{[01 06 00 00 00 ...|

|613196896816594943| 11|{[01 06 00 00 00 ...|

|613196903001096191| 18|{[01 06 00 00 00 ...|

|613196902925598719| 16|{[01 06 00 00 00 ...|

|613196556859867135| 6|{[01 06 00 00 00 ...|

|613196819911933951| 3|{[01 06 00 00 00 ...|

|613196912023044095| 3|{[01 06 00 00 00 ...|

|613196900205592575| 2|{[01 06 00 00 00 ...|

|613196569702825983| 267|{[01 06 00 00 00 ...|

|613196571193901055| 35|{[01 06 00 00 00 ...|

|613196541582114815| 33|{[01 06 00 00 00 ...|

|613196575123963903| 2|{[01 06 00 00 00 ...|

|613196571550416895| 45|{[01 06 00 00 00 ...|

|613196820266352639| 15|{[01 06 00 00 00 ...|

|613196900104929279| 2|{[01 06 00 00 00 ...|

|613196896292306943| 1|{[01 06 00 00 00 ...|

+------------------+-----+--------------------+

only showing top 20 rows

Part 5: Visualize Bins#

Before visualization, cast the

H3_IDXto string. This must be done since ArcGIS Pro does not support storing Long values over 53 bits and most H3 indexes are over 53 bits.Then, utilize the

to_feature_classBDT processor again to save the H3 binned AIS data to a feature class in the scratch workspace in ArcGIS Pro.

[7]:

P.to_feature_class(binned.select(col("H3_IDX").cast("string"), "COUNT", "SHAPE"), # Pro only supports up to 53 bit longs

feature_class_name="AIS_BINNED",

wkid=4326,

workspace="scratch",

shape_field="SHAPE")

In ArcGIS Pro, the symbology color can be customized to reflect the number of points binned into each hexagon. The result is a heat map of vessel activity in the San Francisco bay area.